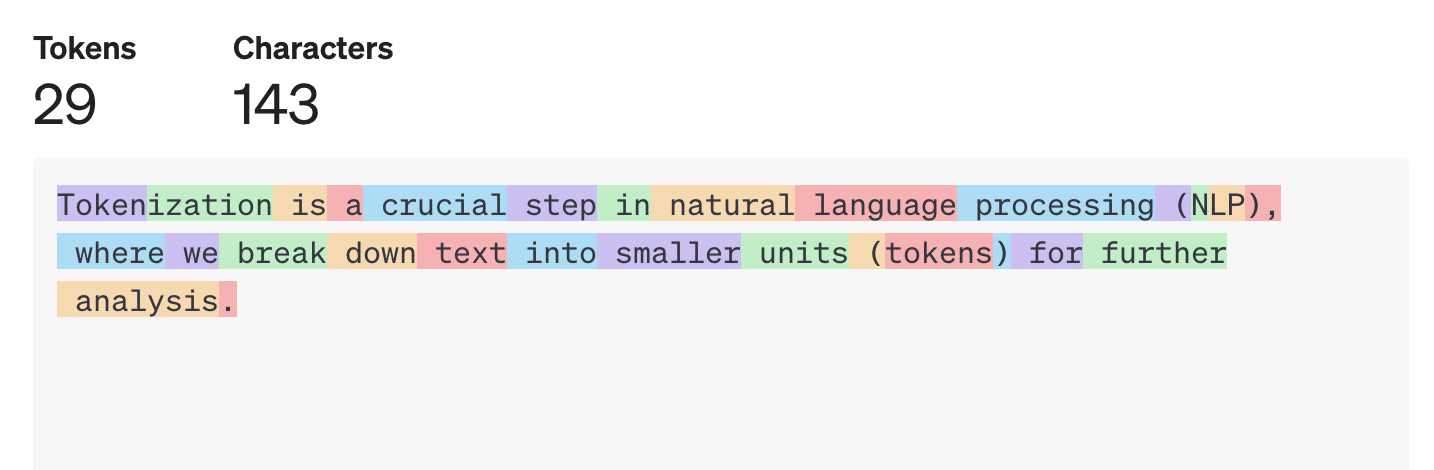

Tokenization is a crucial step in natural language processing (NLP), where we break down text into smaller units (tokens) for further…
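The excerpt stops before it shows any method. To illustrate what "breaking text into tokens" can look like, here is a minimal word-level tokenizer sketch that uses a regular expression. It is not the article's approach. The function name `tokenize` and the splitting rule (word runs plus single punctuation marks) are illustrative assumptions.

```python
import re

def tokenize(text: str) -> list[str]:
    # Hypothetical helper: match runs of word characters, or any single
    # character that is neither a word character nor whitespace (punctuation).
    # Whitespace is never matched, so it is effectively discarded.
    return re.findall(r"\w+|[^\w\s]", text)

print(tokenize("Tokenization is a crucial step in NLP!"))
# → ['Tokenization', 'is', 'a', 'crucial', 'step', 'in', 'NLP', '!']
```

Real NLP pipelines often go further than this. Subword tokenizers, for example, split rare words into smaller pieces. Still, the regex version shows the basic idea of mapping a string to a sequence of discrete units.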


