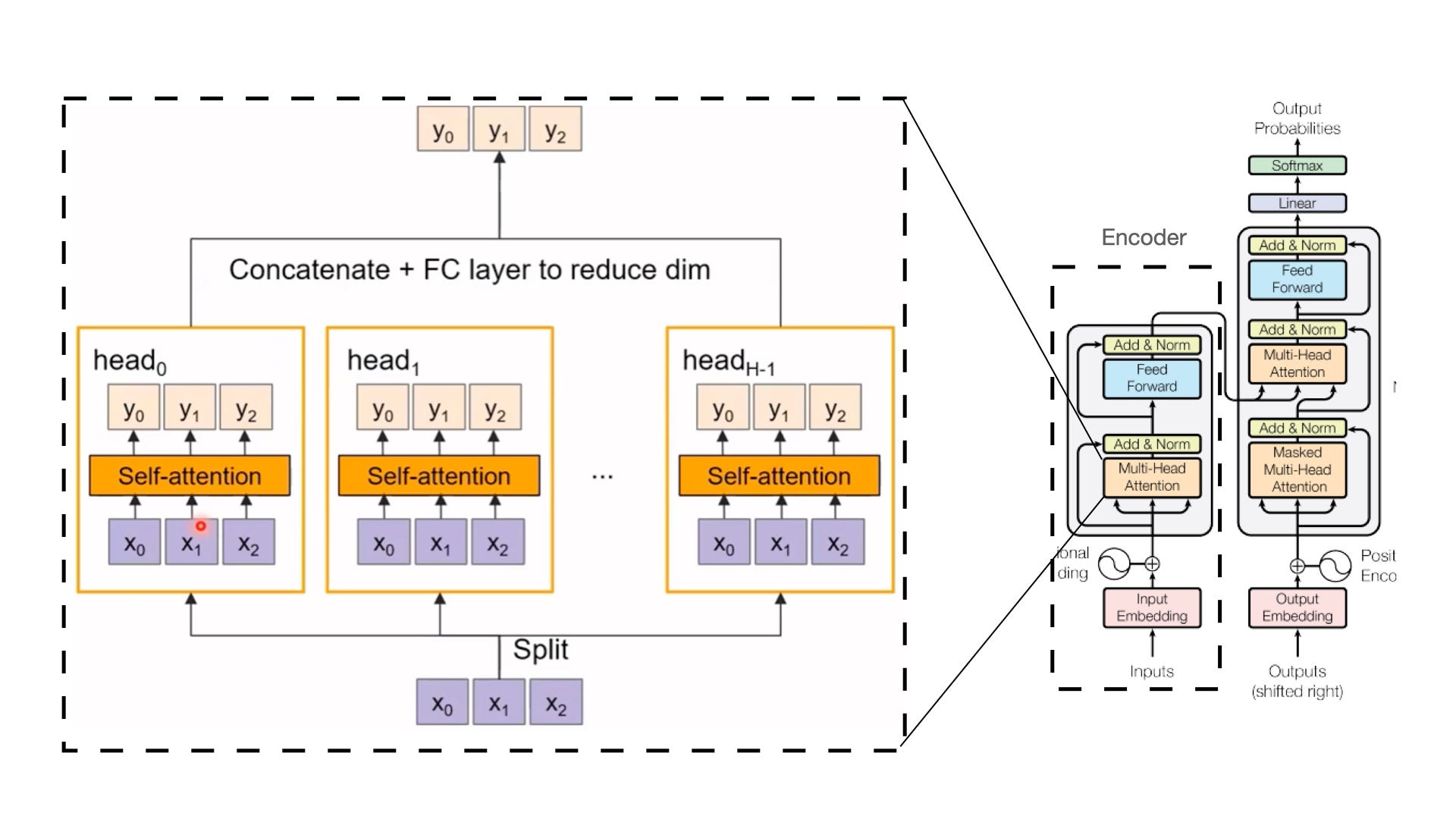

Understanding the self-attention mechanism and its implementation in Transformer models provides valuable insight into why these models are

