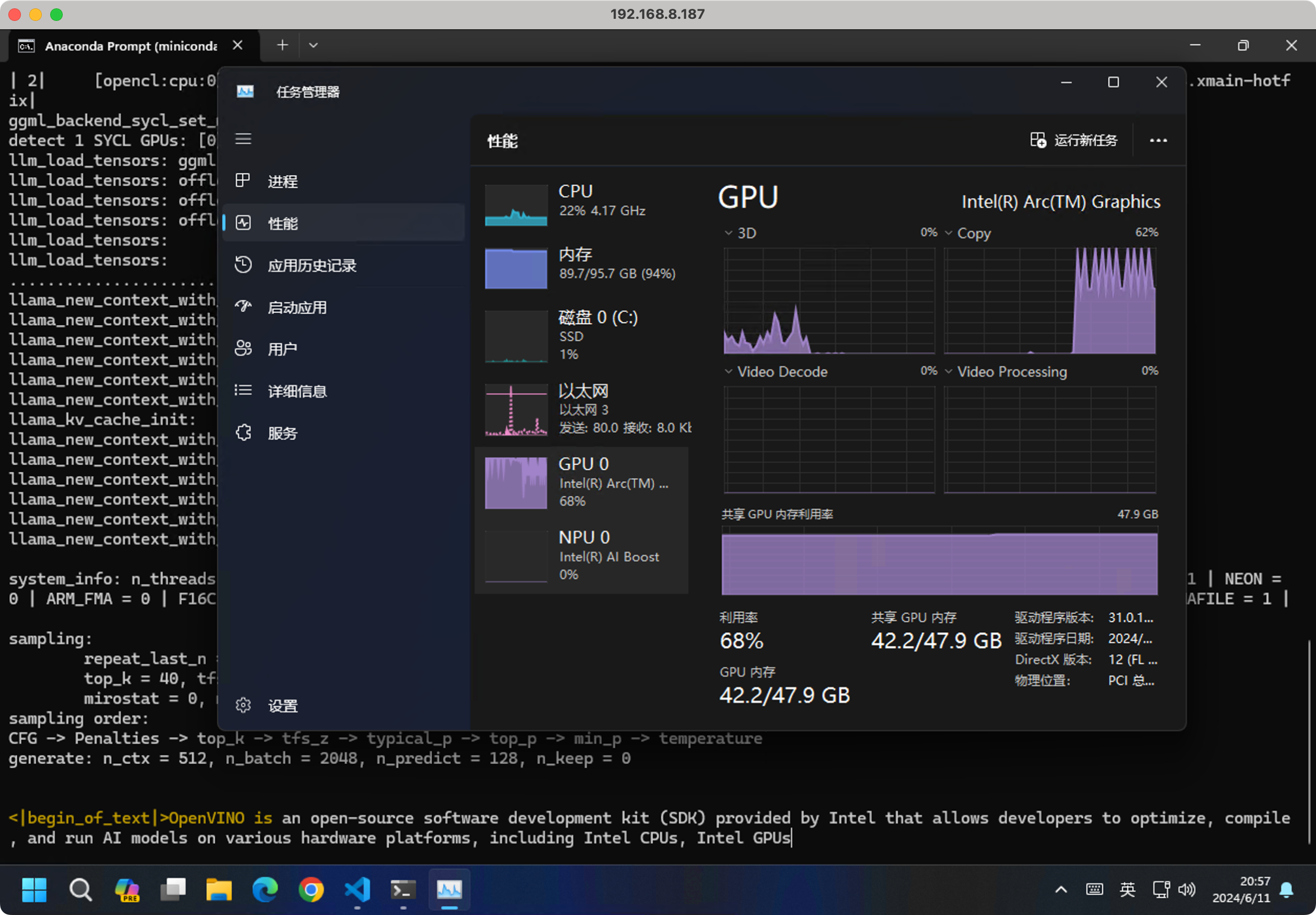

As mentioned in the previous article, Llama.cpp might not be the fastest among the various LLM inference mechanisms provided by Intel.
