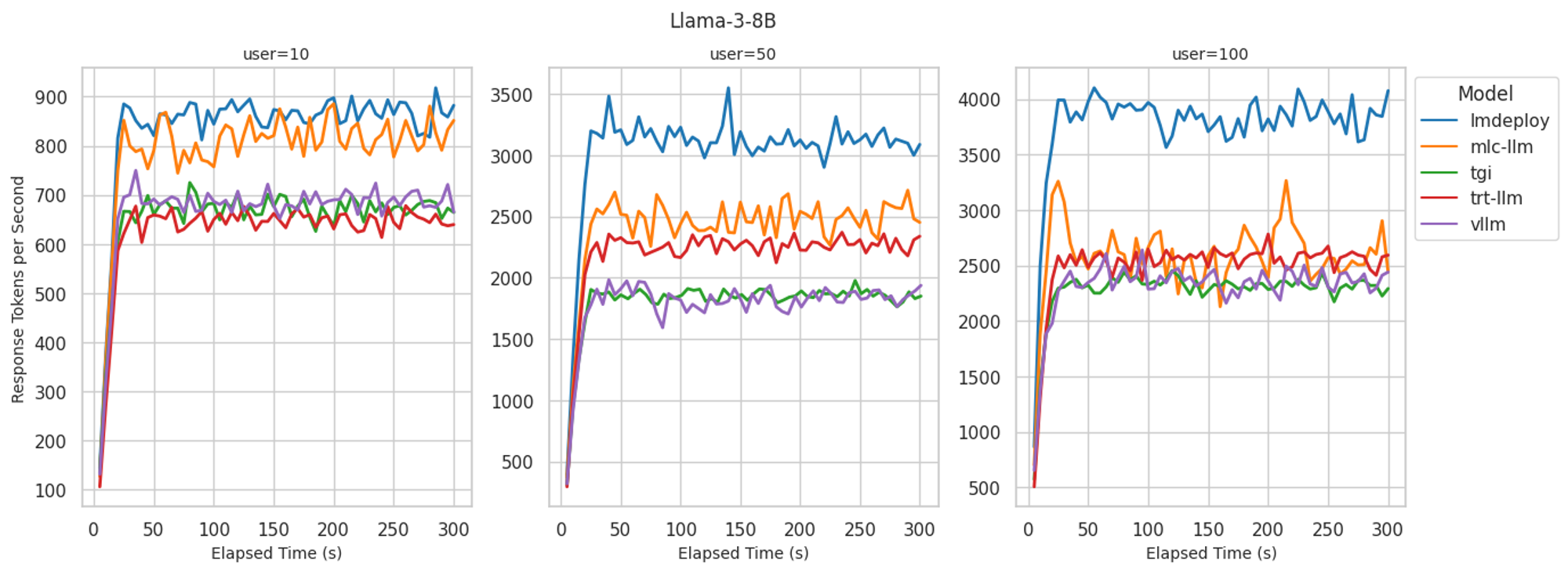

Comparing Llama 3 serving performance on vLLM, LMDeploy, MLC-LLM, TensorRT-LLM, and TGI

Comparing Llama 3 serving performance on vLLM, LMDeploy, MLC-LLM, TensorRT-LLM, and TGIContinue reading on Towards Data Science » Read More AI on Medium

#AI

Comparing Llama 3 serving performance on vLLM, LMDeploy, MLC-LLM, TensorRT-LLM, and TGI

Comparing Llama 3 serving performance on vLLM, LMDeploy, MLC-LLM, TensorRT-LLM, and TGIContinue reading on Towards Data Science » Read More AI on Medium

#AI

+ There are no comments

Add yours