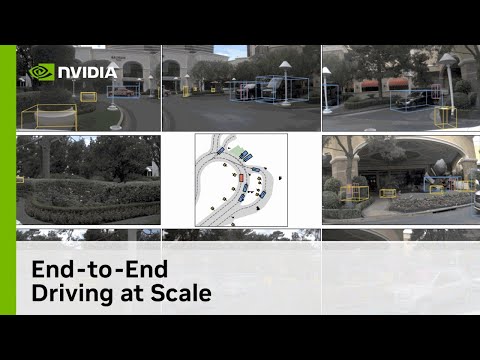

End-to-end autonomous driving is a holistic approach to AV system development in which the system takes in raw sensor data from cameras, radar, and lidar and directly outputs vehicle controls.

Traditional systems rely on modular designs with separate components for detection, tracking, prediction, planning, and control. End-to-end driving instead streamlines the pipeline, avoiding the deep, cascaded path from perception to planning.

NVIDIA’s end-to-end driving model—Hydra-MDP—combines the components into a single network with a minimalistic design. The planning input comes directly from a bird’s-eye view feature map generated from sensor data.
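To make the idea of planning directly from a bird's-eye view (BEV) feature map concrete, here is a minimal sketch in plain Python. It is not the Hydra-MDP architecture: the function names (`attend_to_bev`, `plan_waypoints`), the single-head attention, the linear planning head, and the toy sizes are all assumptions chosen for illustration, and random numbers stand in for trained weights and real sensor features. It only shows one plausible way an ego query could read from BEV features and produce waypoints without separate detection or tracking stages.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def attend_to_bev(ego_query, bev_cells):
    """Single-head dot-product attention of one ego query over BEV cells.

    bev_cells is the H x W feature map flattened to a list of feature vectors.
    Returns the attended context vector and the per-cell attention weights.
    """
    scale = math.sqrt(len(ego_query))
    weights = softmax([dot(ego_query, cell) / scale for cell in bev_cells])
    context = [
        sum(w * cell[d] for w, cell in zip(weights, bev_cells))
        for d in range(len(ego_query))
    ]
    return context, weights


def plan_waypoints(context, head, num_waypoints):
    """Hypothetical linear planning head: context -> one (x, y) per waypoint."""
    flat = [dot(row, context) for row in head]
    return [(flat[2 * i], flat[2 * i + 1]) for i in range(num_waypoints)]


# Toy sizes and random values stand in for a trained network (assumption).
rng = random.Random(0)
H, W, DIM, NUM_WAYPOINTS = 4, 4, 8, 3
bev_cells = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(H * W)]
ego_query = [rng.gauss(0, 1) for _ in range(DIM)]
head = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(2 * NUM_WAYPOINTS)]

context, weights = attend_to_bev(ego_query, bev_cells)
waypoints = plan_waypoints(context, head, NUM_WAYPOINTS)
print(len(weights), len(waypoints))
```

The point of the sketch is the data flow: the planner consumes the BEV features directly, so nothing in between has to commit to discrete detections or tracks before planning begins.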

With this work, the NVIDIA Research team won the End-to-End Driving at Scale category of the CVPR 2024 Autonomous Grand Challenge.

In addition to the real-world data provided in the competition, the model also generalizes to simulation environments with high-fidelity sensors, such as NVIDIA Omniverse, for further development and testing.

00:00:00 – Introducing the end-to-end driving model

00:01:22 – The important role of ego queries

00:01:58 – Tapping into bird’s-eye view features for planning

00:02:03 – The NVIDIA Hydra-MDP solution

00:03:20 – Key element #1: Collecting data across diverse scenarios

00:03:50 – Key element #2: Powerful computing hardware

00:04:06 – Please check out the resources below

Resources:

CVPR 2024 Leaderboard: https://opendrivelab.com/challenge2024/

Hydra-MDP paper: https://arxiv.org/html/2406.06978v1

NVIDIA blog: https://blogs.nvidia.com/blog/auto-research-cvpr-2024/

NVIDIA tech blog: https://developer.nvidia.com/blog/end-to-end-driving-at-scale-with-hydra-mdp/

Watch the full DRIVE Lab series: https://nvda.ws/3LsSgnH

Learn more about DRIVE Labs: https://nvda.ws/36r5c6t

Follow us on social:

Twitter: https://nvda.ws/3LRdkSs

LinkedIn: https://nvda.ws/3wI4kue

#NVIDIADRIVE