Introduction

Neural networks are among the most capable machine learning techniques: they handle complex data patterns well and have shown state-of-the-art results on many classification and regression tasks. Earlier releases of the Predictive Analysis Library (PAL) provided a neural network function called multilayer perceptron (PAL_MULTILAYER_PERCEPTRON). In terms of user experience and of keeping pace with advances in neural network technology, however, that implementation sometimes falls short of customer performance expectations and of requirements for multi-task learning. We have therefore introduced a new neural network function, the Multi-task Multilayer Perceptron (PAL_MLP_MULTI_TASK), in the SAP HANA Cloud 2024 Q2 release. Compared to the original function, the new function provides improvements in the following areas:

Built-in support for multi-task classification scenarios.

An enhanced early-stop method to avoid overfitting in neural networks.

Fixed output activations for easier parameter tuning and a better user experience.

More network complexity control techniques and optimization methods.

Multi-Task Learning

Multi-task learning (MTL) in multilayer perceptrons involves training a single neural network to perform multiple related tasks at once. It utilizes shared hidden layers to capture common features across tasks and task-specific output layers for unique predictions. The network is jointly optimized for all tasks, which can enhance generalization and lead to better performance than training separate networks. MTL is efficient as it reduces the total number of parameters needed. It also facilitates the transfer of knowledge between tasks, which is particularly beneficial when tasks are related. Multi-task classification and multi-task regression have a wide range of applications. For instance, multi-task classification can be used for automated multi-field value proposals or pre-filling of forms, such as Sales Order Automation. Multi-task regression can be used for predicting multiple price or sales targets within a single model.

In the earlier PAL_MULTILAYER_PERCEPTRON function, multi-task learning is supported only for regression. In the PAL_MLP_MULTI_TASK function, it is supported for both classification and regression.

Example

INSERT INTO PAL_PARAMETER_TBL VALUES ('DEPENDENT_VARIABLE', NULL, NULL, 'LABEL1');

INSERT INTO PAL_PARAMETER_TBL VALUES ('DEPENDENT_VARIABLE', NULL, NULL, 'LABEL2');

To use this feature, define every target column with its own DEPENDENT_VARIABLE parameter row, as in the two rows above.
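The shared-layer idea can be sketched in a few lines of plain Python. This is only an illustration of the general multi-task architecture, not the PAL implementation: the layer sizes, the random initialization, and the helper names (`init_layer`, `dense`, `predict`) are assumptions made for the example, and the two heads are named after the LABEL1 and LABEL2 targets used above.

```python
import math
import random

def relu(values):
    return [max(0.0, v) for v in values]

def softmax(values):
    # Subtract the maximum for numerical stability.
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def dense(inputs, weights, bias):
    # weights holds one row per output unit.
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, bias)]

def init_layer(n_in, n_out, rng):
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

rng = random.Random(1234)
shared = init_layer(4, 8, rng)              # hidden layer shared by all tasks
heads = {
    "LABEL1": init_layer(8, 3, rng),        # hypothetical 3-class task
    "LABEL2": init_layer(8, 2, rng),        # hypothetical 2-class task
}

def predict(features):
    hidden = relu(dense(features, *shared))  # computed once, reused by every head
    return {task: softmax(dense(hidden, *head)) for task, head in heads.items()}

probs = predict([0.2, -1.0, 0.5, 3.0])
for task, p in probs.items():
    print(task, [round(x, 3) for x in p])
```

Because the hidden representation is computed once and reused, adding a task only adds the parameters of its own output layer.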

Early Stop Method

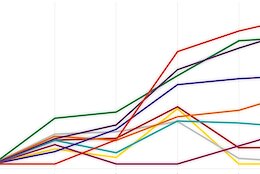

Early stopping is a regularization technique used in training neural networks to prevent overfitting and to hasten the training process. It is a practical and effective method to enhance the generalization capabilities of neural networks by avoiding excessive training that can lead to overfitting. It involves monitoring the model’s performance on a validation set and stopping the training process when the performance begins to degrade or stops improving. The following graph shows how early stop method works in neural networks:

The input data is split into training and validation subsets according to the specified TRAINING_PERCENTAGE. The training subset is used to fit the model, while the validation subset is used to assess the model’s performance by computing a validation loss at the end of each training epoch. Training is terminated when the stopping criteria are satisfied; these are governed by the following parameters:

EARLY_STOP: specifies whether to use the automatic early-stopping method. If it is not used, training stops after MAX_ITERATION is reached.

WARMUP_EPOCHS: number of epochs to wait before the automatic early-stopping method starts.

PATIENCE: number of epochs to wait before ending the training if no improvement is shown.

MAX_ITERATION: maximum number of training epochs.

In addition, the SAVE_BEST_MODEL parameter specifies whether to save the model weights that achieved the minimum loss during training.
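How these parameters interact can be sketched as a small loop over per-epoch validation losses. This is a minimal sketch under stated assumptions, not PAL's code: the function name `train_with_early_stopping` is hypothetical, the argument names simply mirror the parameters above, and the exact way PAL counts warm-up and patience epochs may differ.

```python
def train_with_early_stopping(val_losses, max_iteration, warmup_epochs, patience):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses."""
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    stop_epoch = 0
    for epoch, loss in enumerate(val_losses[:max_iteration], start=1):
        stop_epoch = epoch
        if loss < best_loss:
            # A real trainer would snapshot the weights here (cf. SAVE_BEST_MODEL).
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
        # Assumption: the stopping check is only active after the warm-up epochs.
        if epoch > warmup_epochs and epochs_without_improvement >= patience:
            break
    return stop_epoch, best_epoch

# Made-up validation losses: improvement until epoch 4, then slow degradation.
losses = [0.90, 0.70, 0.60, 0.55, 0.56, 0.57, 0.58, 0.59, 0.60, 0.61]
stop_epoch, best_epoch = train_with_early_stopping(
    losses, max_iteration=500, warmup_epochs=2, patience=3)
print(stop_epoch, best_epoch)  # prints "7 4"
```

With a patience of 3, training stops three epochs after the best epoch, and the weights from the best epoch are the ones worth keeping.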

Optimized Parameter Configuration

The primary focus of the updates is on the parameters HIDDEN_LAYER_ACTIVE_FUNC and OUTPUT_LAYER_ACTIVE_FUNC. For HIDDEN_LAYER_ACTIVE_FUNC, several unsuitable and obsolete activation functions, such as linear activations, have been phased out.

The parameter OUTPUT_LAYER_ACTIVE_FUNC has been deprecated in the context of multi-task multilayer perceptrons. In classification tasks, the softmax activation function is now standard for the output layer, whereas a linear activation is employed for regression tasks.

Optimization Methods

An optimizer is an algorithm that adjusts the weights of the network in order to minimize a loss function. The choice of optimizer can have a significant impact on the performance and convergence speed of the network.

Therefore, in addition to the Stochastic Gradient Descent (SGD) optimizer provided in the earlier neural network functions, PAL_MLP_MULTI_TASK now includes further widely used optimizers, such as RMSprop, Adam, and AdaGrad.

Example

INSERT INTO PAL_PARAMETER_TBL VALUES ('OPTIMIZER', 2, NULL, NULL);
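To make the difference between these optimizers concrete, the sketch below applies the textbook update rules of SGD, AdaGrad, RMSprop, and Adam to a one-dimensional toy loss, f(w) = (w - 3)^2. The learning rates and decay constants are illustrative assumptions, and nothing here reflects PAL internals or the meaning of the integer OPTIMIZER codes.

```python
import math

def grad(w):
    # Gradient of the toy loss f(w) = (w - 3)^2.
    return 2.0 * (w - 3.0)

def sgd(w, steps, lr=0.1):
    for _ in range(steps):
        w -= lr * grad(w)
    return w

def adagrad(w, steps, lr=0.5, eps=1e-8):
    g2_sum = 0.0                      # running sum of squared gradients
    for _ in range(steps):
        g = grad(w)
        g2_sum += g * g
        w -= lr * g / (math.sqrt(g2_sum) + eps)
    return w

def rmsprop(w, steps, lr=0.05, decay=0.9, eps=1e-8):
    g2_avg = 0.0                      # exponential average of squared gradients
    for _ in range(steps):
        g = grad(w)
        g2_avg = decay * g2_avg + (1 - decay) * g * g
        w -= lr * g / (math.sqrt(g2_avg) + eps)
    return w

def adam(w, steps, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    m = v = 0.0                       # first and second moment estimates
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)  # bias correction
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w

results = {name: fn(0.0, 500) for name, fn in
           [("SGD", sgd), ("AdaGrad", adagrad), ("RMSprop", rmsprop), ("Adam", adam)]}
for name, w in results.items():
    print(f"{name}: w = {w:.4f}")
```

All four end close to the minimum at w = 3. The adaptive methods differ in how they rescale each step by the gradient history, which is why they often need less learning-rate tuning than plain SGD on real networks.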

Network Complexity Control Techniques

Batch Normalization

Batch normalization is a technique that significantly accelerates neural network training by stabilizing the distribution of inputs to layers, which helps in achieving faster convergence. It reduces the model’s sensitivity to weight initialization, allowing for more efficient training from various starting points and also enables the use of higher learning rates. Additionally, batch normalization provides a regularization effect, which can help in generalizing the model better and reducing the likelihood of overfitting.

Example

INSERT INTO PAL_PARAMETER_TBL VALUES ('USE_BATCHNORM', 1, NULL, NULL);
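The normalization step itself is simple. The following sketch shows the standard training-time batch normalization transform for a single feature across a mini-batch. The function name and the `gamma`, `beta`, and `eps` values are assumptions for illustration, and the running statistics used at prediction time are omitted.

```python
import math

def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a mini-batch, then scale and shift."""
    n = len(batch)
    mean = sum(batch) / n
    var = sum((x - mean) ** 2 for x in batch) / n
    # gamma and beta are learnable in a real network; fixed here for clarity.
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]

activations = [10.0, 12.0, 14.0, 16.0]
normalized = batch_norm(activations)
print([round(x, 3) for x in normalized])
```

Whatever the scale of the incoming activations, the next layer sees values with roughly zero mean and unit variance, which is what stabilizes training.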

Dropout

Dropout is a powerful regularization technique that prevents overfitting in neural networks by randomly deactivating a subset of neurons during training, which encourages the network to develop more robust features. This method promotes redundancy in the network, as it ensures that no single neuron can dominate the learning process, thereby improving the model’s ability to generalize from the training data to unseen data.

Example

INSERT INTO PAL_PARAMETER_TBL VALUES ('DROPOUT_PROB', NULL, 0.1, NULL);
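As a rough illustration of what a dropout probability of 0.1 means, the sketch below implements the commonly used "inverted dropout" variant: each activation is zeroed with probability `drop_prob` during training, and the survivors are rescaled so the expected value is unchanged. Whether PAL uses exactly this variant is an assumption, and the function name is hypothetical.

```python
import random

def dropout(activations, drop_prob, rng, training=True):
    # At prediction time (training=False) the layer passes values through unchanged.
    if not training or drop_prob == 0.0:
        return list(activations)
    keep_prob = 1.0 - drop_prob
    # Keep each unit with probability keep_prob and rescale it by 1 / keep_prob.
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]

rng = random.Random(1234)
hidden = [1.0] * 10000
dropped = dropout(hidden, drop_prob=0.1, rng=rng)
zero_fraction = dropped.count(0.0) / len(dropped)
print(f"fraction of deactivated units: {zero_fraction:.3f}")
```

About one unit in ten is deactivated in each pass, and a different random subset is chosen every time, so the network cannot rely on any single neuron.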

Efficiency and Accuracy Measures

In this section, we present the training time and accuracy results from our evaluations on one classification dataset and one regression dataset.

Classification

The Cover Type dataset, part of the UCI Machine Learning Repository, contains tree observations from four areas of the Roosevelt National Forest in Colorado. The dataset has 581012 instances, each with 54 features and one target. Of these, 15120 are used for training and the remaining instances are used for validation.

| Function Name | Parameter configuration | Metric on Evaluation Data | Time cost |
| --- | --- | --- | --- |
| PAL_MULTILAYER_PERCEPTRON | hidden_layer_size: [60] * 3; activation: relu; output_activation: linear; training_style: batch; learning_rate: 0.01; max_iteration: 500 | Accuracy: 74.03% | Training: 444.9s |
| PAL_MLP_MULTI_TASK | hidden_layer_size: [60] * 3; batch_size: 1024; max_iteration: 500; patience: 5; training_percentage: 1.0; learning_rate: 0.005 | Accuracy: 74.5% | Training: 94.7s |

Code Sample

SET SCHEMA DM_PAL;

-- Pre-model input table; it stays empty in this example.
DROP TABLE PAL_MLP_CLS_PRE_MODEL_TBL;
CREATE COLUMN TABLE PAL_MLP_CLS_PRE_MODEL_TBL(
    "ROW_INDEX" INTEGER,
    "PART_INDEX" INTEGER,
    "MODEL_CONTENT" NVARCHAR(5000)
);

-- Tables that receive the trained model, the training log, and the statistics.
DROP TABLE PAL_MLP_CLS_MODEL_TBL;
CREATE COLUMN TABLE PAL_MLP_CLS_MODEL_TBL(
    "ROW_INDEX" INTEGER,
    "PART_INDEX" INTEGER,
    "MODEL_CONTENT" NVARCHAR(5000)
);

DROP TABLE PAL_MLP_LOG_TBL;
CREATE COLUMN TABLE PAL_MLP_LOG_TBL(
    "ITERATION" INTEGER,
    "ERROR" DOUBLE
);

DROP TABLE PAL_MLP_STAT_TBL;
CREATE COLUMN TABLE PAL_MLP_STAT_TBL(
    "STAT_NAME" NVARCHAR(256),
    "STAT_VALUE" NVARCHAR(1000)
);

-- Training parameters.
DROP TABLE PAL_PARAMETER_TBL;
CREATE COLUMN TABLE PAL_PARAMETER_TBL(
    "PARAM_NAME" NVARCHAR(256),
    "INT_VALUE" INTEGER,
    "DOUBLE_VALUE" DOUBLE,
    "STRING_VALUE" NVARCHAR(1000)
);

INSERT INTO PAL_PARAMETER_TBL VALUES ('HAS_ID', 1, NULL, NULL);
INSERT INTO PAL_PARAMETER_TBL VALUES ('HIDDEN_LAYER_SIZE', NULL, NULL, '60, 60, 60');
INSERT INTO PAL_PARAMETER_TBL VALUES ('MAX_ITERATION', 1000, NULL, NULL);
INSERT INTO PAL_PARAMETER_TBL VALUES ('HIDDEN_LAYER_ACTIVE_FUNC', 2, NULL, NULL);
INSERT INTO PAL_PARAMETER_TBL VALUES ('EARLY_STOP', 1, NULL, NULL);
INSERT INTO PAL_PARAMETER_TBL VALUES ('PATIENCE', 5, NULL, NULL);
INSERT INTO PAL_PARAMETER_TBL VALUES ('LEARNING_RATE', NULL, 0.005, NULL);
INSERT INTO PAL_PARAMETER_TBL VALUES ('DEPENDENT_VARIABLE', NULL, NULL, 'LABEL');
INSERT INTO PAL_PARAMETER_TBL VALUES ('BATCH_SIZE', 1024, NULL, NULL);
INSERT INTO PAL_PARAMETER_TBL VALUES ('NORMALIZATION', 1, NULL, NULL);
INSERT INTO PAL_PARAMETER_TBL VALUES ('SEED', 1234, NULL, NULL);

-- Train the model and persist the outputs.
DO BEGIN
    lt_data = SELECT * FROM PAL_TRAIN_COVERTYPE_DATA_TBL;
    lt_param = SELECT * FROM PAL_PARAMETER_TBL;
    lt_premodel = SELECT * FROM PAL_MLP_CLS_PRE_MODEL_TBL;
    CALL _SYS_AFL.PAL_MLP_MULTI_TASK(:lt_data, :lt_param, :lt_premodel, lt_model, lt_log, lt_stat, lt_opt, lt_ph);
    INSERT INTO PAL_MLP_CLS_MODEL_TBL SELECT * FROM :lt_model;
    INSERT INTO PAL_MLP_LOG_TBL SELECT * FROM :lt_log;
    INSERT INTO PAL_MLP_STAT_TBL SELECT * FROM :lt_stat;
    SELECT * FROM PAL_MLP_CLS_MODEL_TBL;
    SELECT * FROM PAL_MLP_LOG_TBL;
    SELECT * FROM PAL_MLP_STAT_TBL;
END;

-- Predict with the trained model; the prediction parameter table is left empty.
DROP TABLE #PAL_PARAMETER_TBL;
CREATE LOCAL TEMPORARY COLUMN TABLE #PAL_PARAMETER_TBL(
    "PARAM_NAME" NVARCHAR(256),
    "INT_VALUE" INTEGER,
    "DOUBLE_VALUE" DOUBLE,
    "STRING_VALUE" NVARCHAR(1000)
);

CALL _SYS_AFL.PAL_MLP_MULTI_TASK_PREDICT(PAL_PREDICT_COVERTYPE_DATA_TBL, "#PAL_PARAMETER_TBL", PAL_MLP_CLS_MODEL_TBL, ?, ?);

Regression

The Appliance Energy dataset, also from the UCI Machine Learning Repository, includes variables related to home appliances, weather patterns, and other environmental elements. The goal is to predict a household’s energy usage from the input features. The dataset has 19735 instances, each with 28 features and one target; 75% of them are used for training and 25% for validation.

| Function Name | Parameter configuration | Metric on Evaluation Data | Time cost |
| --- | --- | --- | --- |
| PAL_MULTILAYER_PERCEPTRON | hidden_layer_size: [100]; activation: relu; output_activation: linear; training_style: batch; max_iteration: 1000; learning_rate: 0.001 | R² ≈ 0.42 | Training: 218.9s |
| PAL_MLP_MULTI_TASK | hidden_layer_size: [100] * 2; batch_size: 1024; max_iteration: 500; patience: 5; training_percentage: 1.0; learning_rate: 0.01 | R² ≈ 0.44 | Training: 54s |
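For readers unfamiliar with the two metrics reported in these comparisons, the following sketch shows their standard definitions: accuracy as the fraction of correct predictions, and R² as one minus the ratio of residual to total sum of squares. The sample values are made up for illustration and are unrelated to the datasets above.

```python
def accuracy(y_true, y_pred):
    # Fraction of predictions that match the true label.
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def r2_score(y_true, y_pred):
    # Coefficient of determination: 1 - SS_res / SS_tot.
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

acc = accuracy([1, 2, 2, 3], [1, 2, 3, 3])
r2 = r2_score([3.0, 5.0, 7.0, 9.0], [2.5, 5.5, 7.0, 8.0])
print(acc, round(r2, 3))  # prints "0.75 0.925"
```

An R² of 1 means perfect predictions, while 0 means the model does no better than always predicting the mean of the target.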

Code Sample

SET SCHEMA DM_PAL;

-- Pre-model input table; it stays empty in this example.
DROP TABLE PAL_MLP_REG_PRE_MODEL_TBL;
CREATE COLUMN TABLE PAL_MLP_REG_PRE_MODEL_TBL(
    "ROW_INDEX" INTEGER,
    "PART_INDEX" INTEGER,
    "MODEL_CONTENT" NVARCHAR(5000)
);

-- Tables that receive the trained model, the training log, and the statistics.
DROP TABLE PAL_MLP_REG_MODEL_TBL;
CREATE COLUMN TABLE PAL_MLP_REG_MODEL_TBL(
    "ROW_INDEX" INTEGER,
    "PART_INDEX" INTEGER,
    "MODEL_CONTENT" NVARCHAR(5000)
);

DROP TABLE PAL_MLP_LOG_TBL;
CREATE COLUMN TABLE PAL_MLP_LOG_TBL(
    "ITERATION" INTEGER,
    "ERROR" DOUBLE
);

DROP TABLE PAL_MLP_STAT_TBL;
CREATE COLUMN TABLE PAL_MLP_STAT_TBL(
    "STAT_NAME" NVARCHAR(256),
    "STAT_VALUE" NVARCHAR(1000)
);

-- Training parameters. LEARNING_RATE is set once, to the 0.01 listed in the comparison above.
DROP TABLE PAL_PARAMETER_TBL;
CREATE COLUMN TABLE PAL_PARAMETER_TBL(
    "PARAM_NAME" NVARCHAR(256),
    "INT_VALUE" INTEGER,
    "DOUBLE_VALUE" DOUBLE,
    "STRING_VALUE" NVARCHAR(1000)
);

INSERT INTO PAL_PARAMETER_TBL VALUES ('HAS_ID', 1, NULL, NULL);
INSERT INTO PAL_PARAMETER_TBL VALUES ('FUNCTIONALITY', 1, NULL, NULL);
INSERT INTO PAL_PARAMETER_TBL VALUES ('BATCH_SIZE', 1024, NULL, NULL);
INSERT INTO PAL_PARAMETER_TBL VALUES ('HIDDEN_LAYER_SIZE', NULL, NULL, '100, 100');
INSERT INTO PAL_PARAMETER_TBL VALUES ('HIDDEN_LAYER_ACTIVE_FUNC', 2, NULL, NULL);
INSERT INTO PAL_PARAMETER_TBL VALUES ('MAX_ITERATION', 1000, NULL, NULL);
INSERT INTO PAL_PARAMETER_TBL VALUES ('SEED', 1234, NULL, NULL);
INSERT INTO PAL_PARAMETER_TBL VALUES ('PATIENCE', 5, NULL, NULL);
INSERT INTO PAL_PARAMETER_TBL VALUES ('OPTIMIZER', 2, NULL, NULL);
INSERT INTO PAL_PARAMETER_TBL VALUES ('LEARNING_RATE', NULL, 0.01, NULL);
INSERT INTO PAL_PARAMETER_TBL VALUES ('TRAINING_PERCENTAGE', NULL, 1, NULL);
INSERT INTO PAL_PARAMETER_TBL VALUES ('NORMALIZATION', 1, NULL, NULL);
INSERT INTO PAL_PARAMETER_TBL VALUES ('DEPENDENT_VARIABLE', NULL, NULL, 'Appliances');

-- Train the model and persist the outputs.
DO BEGIN
    lt_data = SELECT * FROM PAL_TRAIN_ENERGY_DATA_TBL;
    lt_param = SELECT * FROM PAL_PARAMETER_TBL;
    lt_premodel = SELECT * FROM PAL_MLP_REG_PRE_MODEL_TBL;
    CALL _SYS_AFL.PAL_MLP_MULTI_TASK(:lt_data, :lt_param, :lt_premodel, lt_model, lt_log, lt_stat, lt_opt, lt_ph);
    INSERT INTO PAL_MLP_REG_MODEL_TBL SELECT * FROM :lt_model;
    INSERT INTO PAL_MLP_LOG_TBL SELECT * FROM :lt_log;
    INSERT INTO PAL_MLP_STAT_TBL SELECT * FROM :lt_stat;
    SELECT * FROM PAL_MLP_REG_MODEL_TBL;
    SELECT * FROM PAL_MLP_LOG_TBL;
    SELECT * FROM PAL_MLP_STAT_TBL;
END;

-- Predict with the trained regression model; the prediction parameter table is left empty.
DROP TABLE #PAL_PARAMETER_TBL;
CREATE LOCAL TEMPORARY COLUMN TABLE #PAL_PARAMETER_TBL(
    "PARAM_NAME" NVARCHAR(256),
    "INT_VALUE" INTEGER,
    "DOUBLE_VALUE" DOUBLE,
    "STRING_VALUE" NVARCHAR(1000)
);

CALL _SYS_AFL.PAL_MLP_MULTI_TASK_PREDICT(PAL_PREDICT_ENERGY_DATA_TBL, "#PAL_PARAMETER_TBL", PAL_MLP_REG_MODEL_TBL, ?, ?);

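On both datasets, the new neural network implementation achieved slightly better evaluation metrics (74.5% vs. 74.03% accuracy on Cover Type; R² of about 0.44 vs. 0.42 on Appliance Energy) while cutting training time from 444.9s to 94.7s and from 218.9s to 54s, respectively.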
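The size of the training-time gain can be read directly off the reported timings. The short calculation below uses only the numbers stated in this section; the dictionary layout and key names are just for illustration.

```python
# Training times in seconds, as reported in the comparisons above.
timings = {
    "Cover Type (classification)": {"old_seconds": 444.9, "new_seconds": 94.7},
    "Appliance Energy (regression)": {"old_seconds": 218.9, "new_seconds": 54.0},
}

speedups = {name: t["old_seconds"] / t["new_seconds"] for name, t in timings.items()}
for name, factor in speedups.items():
    print(f"{name}: {factor:.1f}x faster training")
# Cover Type (classification): 4.7x faster training
# Appliance Energy (regression): 4.1x faster training
```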

Summary

Compared with the earlier neural network implementation, PAL_MLP_MULTI_TASK supports multi-task learning for both classification and regression tasks. It introduces an early stopping method designed to mitigate overfitting. Fixed output activations simplify parameter tuning and improve the overall user experience. PAL_MLP_MULTI_TASK also offers a wider selection of optimizers and network complexity control techniques (batch normalization and dropout), giving users more flexibility and control in their modeling. Finally, on our evaluation datasets, PAL_MLP_MULTI_TASK delivered better evaluation metrics and shorter training times.

Useful Links

Install the Python Machine Learning client for SAP HANA from the pypi public repository: hana-ml

HANA Predictive Analysis Library Documentation

Other blog posts on hana-ml:

Global Explanation Capabilities in SAP HANA Machine Learning

Exploring ML Explainability in SAP HANA PAL – Classification and Regression

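Fairness in Machine Learning – A New Feature in SAP HANA Cloud PAL

A Multivariate Time Series Modeling and Forecasting Guide with Python Machine Learning Client for SA…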

Outlier Detection using Statistical Tests in Python Machine Learning Client for SAP HANA

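Outlier Detection by Clustering using Python Machine Learning Client for SAP HANA

Anomaly Detection in Time-Series using Seasonal Decomposition in Python Machine Learning Client for …

Outlier Detection with One-class Classification using Python Machine Learning Client for SAP HANA

Learning from Labeled Anomalies for Efficient Anomaly Detection using Python Machine Learning Client…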

Python Machine Learning Client for SAP HANA

Import multiple excel files into a single SAP HANA table

COPD study, explanation and interpretability with Python machine learning client for SAP HANA

Model Storage with Python Machine Learning Client for SAP HANA

Identification of Seasonality in Time Series with Python Machine Learning Client for SAP HANA
