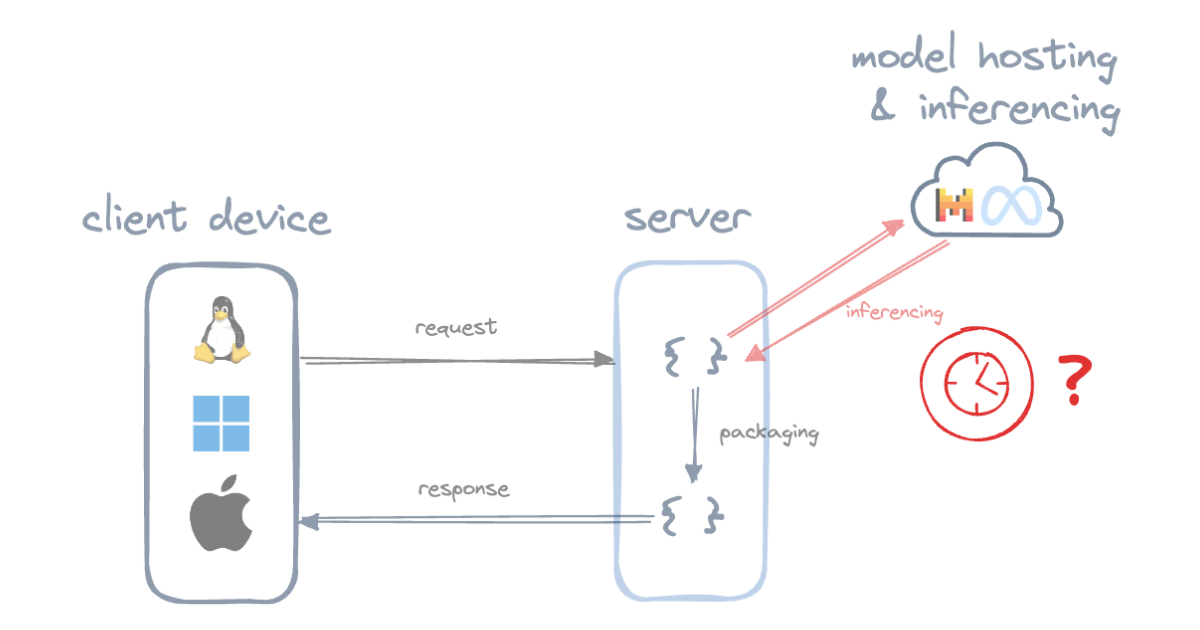

Inference speed is one of the most important aspects, if not THE most important aspect, of any LLM-based AI product.


