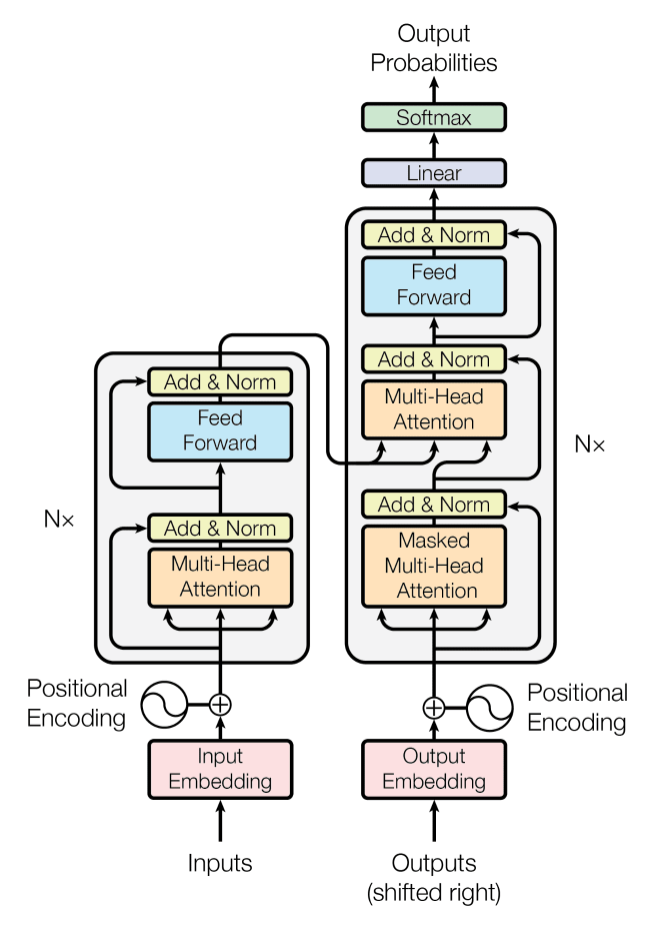

This article will guide you through self-attention mechanisms, a core component of transformer architectures and large language models…
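The excerpt stops before any detail. As a hedged illustration of the mechanism it introduces, here is a minimal sketch of single-head scaled dot-product self-attention in plain Python. It is not the article's own code. The helper names (`matmul`, `softmax`, `self_attention`) and the toy identity projection matrices are hypothetical and chosen only for illustration. Real models use learned projection matrices, multiple heads, and a tensor library.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    # Plain nested-loop matrix product: (n x k) @ (k x m) -> (n x m)
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability
    mx = max(row)
    exps = [math.exp(x - mx) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Matrix:
    # Project each token embedding (one row of x) into query, key and value vectors
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    # Compare every query with every key, scaled by sqrt(d_k)
    scores = matmul(q, transpose(k))
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    # Each row of weights sums to 1: how strongly one token attends to each token
    weights = [softmax(row) for row in scaled]
    # Each output row is the attention-weighted sum of the value vectors
    return matmul(weights, v)


# Hypothetical toy input: 3 tokens with 2-dimensional embeddings, identity projections
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
eye = [[1.0, 0.0], [0.0, 1.0]]
out = self_attention(x, eye, eye, eye)
for row in out:
    print([round(val, 3) for val in row])
```

Each output row is a convex combination of the value vectors, so every token's new representation mixes in information from the others in proportion to how well its query matches their keys.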


#AI