In this series of blogs, I will showcase how to integrate SAP AI Core services into SAP Mobile Development Kit (MDK) to develop mobile applications with AI capabilities.

- Part 1: SAP Mobile Development Kit (MDK) integration with SAP AI Core services – Setup
- Part 2: SAP Mobile Development Kit (MDK) integration with SAP AI Core services – Business Use Cases
- Part 3: SAP Mobile Development Kit (MDK) integration with SAP AI Core services – Measurement Readings
- Part 4: SAP MDK integration with SAP AI Core services – Anomaly Detection and Maintenance Guidelines
- Part 5: SAP MDK integration with SAP AI Core services – Retrieval Augmented Generation
- Part 6: SAP Mobile Development Kit (MDK) integration with SAP AI Core services – Work Order and Operation Recording (Current Blog)

In the previous blog, we showed how to set up the SAP HANA Cloud vector engine and explained Retrieval Augmented Generation (RAG). In this blog, we will explore how to record work orders and operations using voice and free-text input.

SAP Mobile Development Kit (MDK) integration with SAP AI Core services – Work Order and Operation Recording

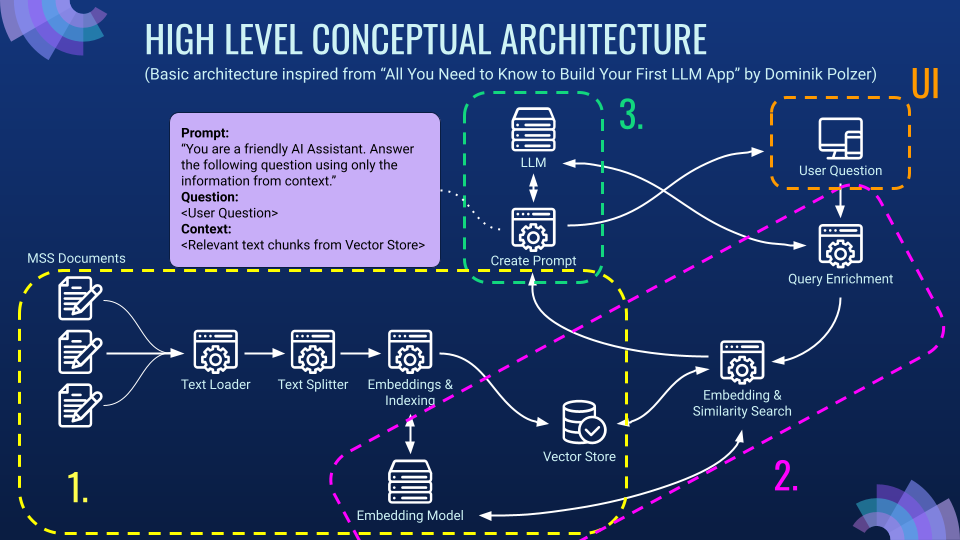

First, let's look at how RAG is used in our use case. RAG is used during AI calls to generate the equipment-specific form cells on the operation details page. For example, a water pump has different attributes than a laptop, and RAG ensures that the AI generates the appropriate operation details for each. Currently, the knowledge base, which we introduced in the previous blog, covers only a few equipment types (e.g., water pumps and electricity meters) with very limited content. In a real scenario, there will be many more equipment types, each with a large volume of information, and that is where the fast similarity search of the SAP HANA Cloud vector engine pays off. In this blog, we use a very simple example in which the equipment schema is the only relevant knowledge.

We use a JavaScript function to retrieve the equipment schema from the SAP HANA Cloud vector engine. This schema includes both a common schema and an equipment-specific schema. Remember the common and specific schemas of the water pump that we populated into the SAP HANA Cloud vector engine as domain-specific knowledge in the previous blog? Here they are again:

Common Schema

```json
[
  {"Title": "taskTitle", "Description": "Operation Title", "Type": "Text"},
  {"Title": "shortDescription", "Description": "Operation short description", "Type": "Text"},
  {"Title": "description", "Description": "Detailed operation description", "Type": "Text"},
  {"Title": "priority", "Description": "priority (high, medium, low)", "Type": "Text"}
]
```

Specific Schema for SAPPump:

```json
[
  {"Title": "yearOfInstallation", "Description": "Year of installation", "Type": "Text"},
  {"Title": "length", "Description": "Length of the water pump in meters", "Type": "Text"}
]
```

Now we will use the JavaScript function below to retrieve the water pump equipment schema.

```javascript
// Query the RAG OData function on the CAP vector engine service for the
// common schema and the equipment-specific schema of the given equipment.
async function getEquipmentSchema(context, equipmentName) {
  const functionName = 'getRagResponse';
  const serviceName = '/MDKDevApp/Services/CAPVectorEngine.service';
  const parameters = {
    "value": `In JSON, Get the Common schema and the specific schema of the equipment ${equipmentName}. The common schema cannot be empty. The title of each property must be in camel cases. In camel case, you start a name with a small letter. If the name has multiple words, the later words will start with a capital letter`
  };
  const oFunction = { Name: functionName, Parameters: parameters };
  try {
    const response = await context.callFunction(serviceName, oFunction);
    const responseObj = JSON.parse(response);
    // The RAG answer (a JSON string) is returned in completion.content
    return responseObj.completion.content;
  } catch (error) {
    console.log("Error:", error.message);
  }
  // Fall back to an empty schema string if the call or parsing failed
  return "";
}
```

The above code snippet uses the MDK Client API’s `callFunction` method to call the OData function `getRagResponse` on the `CAPVectorEngine` OData service. It retrieves the common schema and the specific schema of the equipment in JSON format.
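Because `getRagResponse` returns the model's answer as a string, a malformed answer only surfaces later as an empty or broken schema. If you want to check the schema before passing it on, the sketch below shows a more defensive parse, assuming the response shape `{ completion: { content: "<JSON text>" } }` used in `getEquipmentSchema`. The helper name `parseRagCompletion` is hypothetical, and trimming text outside the outermost braces is an assumption about how the model may wrap its JSON (for example in prose or Markdown fences), not something the service guarantees.

```javascript
// Hypothetical helper: parse the raw string returned by the getRagResponse
// OData function. Assumes the shape { completion: { content: "<JSON text>" } }.
function parseRagCompletion(rawResponse) {
  let content;
  try {
    content = JSON.parse(rawResponse).completion.content;
  } catch (e) {
    return null; // not JSON, or no completion.content
  }
  if (typeof content !== "string") {
    return null;
  }
  // The model may surround the JSON with prose or Markdown fences, so keep
  // only the text between the first "{" and the last "}".
  const start = content.indexOf("{");
  const end = content.lastIndexOf("}");
  if (start === -1 || end < start) {
    return null;
  }
  try {
    return JSON.parse(content.slice(start, end + 1));
  } catch (e) {
    return null;
  }
}

// Usage with a mocked response string:
const raw = JSON.stringify({
  completion: { content: 'Here you go: {"commonSchema": {"taskTitle": "Text"}}' }
});
console.log(parseRagCompletion(raw)); // { commonSchema: { taskTitle: 'Text' } }
```

Returning `null` instead of throwing lets the calling rule decide whether to retry the RAG call or show an error message.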

The returned equipment schema looks like this:

```text
Equipment schema: {
  "commonSchema": {
    "taskTitle": "Text",
    "shortDescription": "Text",
    "description": "Text",
    "priority": "Text"
  },
  "SAPPump": {
    "yearOfInstallation": "Text",
    "length": "Text"
  }
}
```
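The RAG answer reshapes the stored `{Title, Description, Type}` arrays into one compact `title → type` map per schema group, keyed `commonSchema` and `SAPPump`. To make that relationship concrete, the sketch below performs the same reshaping deterministically on the two arrays shown earlier. It is illustrative only: `toCompactSchema` is a hypothetical helper, not part of MDK or the CAP service, and in the app this reshaping is done by the model.

```javascript
// Illustrative only (hypothetical helper): reshape schema arrays of
// { Title, Description, Type } into the compact { title: type } maps
// that the RAG result above contains.
function toCompactSchema(commonEntries, specificEntries, equipmentName) {
  const toMap = (entries) =>
    Object.fromEntries(entries.map((entry) => [entry.Title, entry.Type]));
  return {
    commonSchema: toMap(commonEntries),
    [equipmentName]: toMap(specificEntries),
  };
}

const common = [
  { Title: "taskTitle", Description: "Operation Title", Type: "Text" },
  { Title: "shortDescription", Description: "Operation short description", Type: "Text" },
  { Title: "description", Description: "Detailed operation description", Type: "Text" },
  { Title: "priority", Description: "priority (high, medium, low)", Type: "Text" },
];
const pump = [
  { Title: "yearOfInstallation", Description: "Year of installation", Type: "Text" },
  { Title: "length", Description: "Length of the water pump in meters", Type: "Text" },
];

console.log(JSON.stringify(toCompactSchema(common, pump, "SAPPump"), null, 2));
```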

Once the equipment schema is fetched, we use it to generate the form cells:

```javascript
// Generate form cells from the equipment schema string
// returned by getEquipmentSchema (stored in equipmentSchema)
console.log("Preparing form cells…");
const spinnerID = context.showActivityIndicator('Preparing forms…');
const formCellSchema = await generateFormCells(context, equipmentSchema);
console.log("Form cells schema:", formCellSchema);
console.log("Form cells prepared!");
context.dismissActivityIndicator(spinnerID);
```

Here, we will call the `generateFormCells` function with the equipment schema fetched above as the input parameter.

```javascript
// Ask the LLM (via SAP AI Core) to turn the equipment schema into MDK form
// cell definitions. The forced function call makes the model answer with
// arguments that follow formCellSchema (defined below).
async function generateFormCells(context, schema) {
  const method = "POST";
  const path = "/chat/completions?api-version=2024-02-01";
  const body = {
    messages: [
      {
        role: "user",
        content: `Generate form filling cells based on the schema provided as mentioned. ${schema}`
      }
    ],
    max_tokens: 256,
    temperature: 0,
    frequency_penalty: 0,
    presence_penalty: 0,
    functions: [{ name: "format_response", parameters: formCellSchema }],
    function_call: { name: "format_response" },
  };
  const headers = {
    "content-type": "application/json",
    "AI-Resource-Group": "default"
  };
  const service = "/MDKDevApp/Services/AzureOpenAI.service";
  return context.executeAction({
    "Name": "/MDKDevApp/Actions/SendRequest.action",
    "Properties": {
      "ShowActivityIndicator": false,
      "Target": {
        "Path": path,
        "RequestProperties": {
          "Method": method,
          "Headers": headers,
          "Body": JSON.stringify(body)
        },
        "Service": service
      }
    }
  }).then(response => {
    // The function-call arguments arrive as a JSON string
    return JSON.parse(response.data.choices[0].message.function_call.arguments);
  });
}
```

Again, we will send a POST request to the SAP AI Core service via the AzureOpenAI destination, using the same AI Core service deployment as in previous blogs for anomaly detection and meter reading. The difference is that this time we will not upload an image; instead, we will use the equipment schema we obtained in the last step as the content.

```javascript
content: `Generate form filling cells based on the schema provided as mentioned. ${schema}`
```

We also use an LLM function call to specify the structured output we want from the AI service. Note that the way to define an LLM function call may vary between LLMs. This example is based on OpenAI GPT-4; for details, refer to OpenAI's documentation (https://platform.openai.com/docs/guides/function-calling). If you are using another LLM, consult the respective vendor's documentation to understand how to make function calls or, more generally, how to connect LLMs to external tools.

```javascript
functions: [{ name: "format_response", parameters: formCellSchema }],
function_call: { name: "format_response" },
```
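The `functions` and `function_call` parameters are the original form of OpenAI function calling; OpenAI has since deprecated them in favor of `tools` and `tool_choice`, with the arguments returned in `message.tool_calls`. Whether your SAP AI Core deployment and the `api-version` in the request path accept the newer form is an assumption you should verify for your model version. The sketch below builds either request shape and reads the arguments from either response shape; `buildForcedFunctionBody` and `parseForcedFunctionArguments` are hypothetical helpers, not MDK APIs.

```javascript
// Hypothetical helper: build a chat-completions body that forces a call to
// one function, in either the tools form or the legacy form used in this blog.
function buildForcedFunctionBody(messages, name, parameters, useTools) {
  const body = { messages, max_tokens: 256, temperature: 0 };
  if (useTools) {
    body.tools = [{ type: "function", function: { name, parameters } }];
    body.tool_choice = { type: "function", function: { name } };
  } else {
    body.functions = [{ name, parameters }];
    body.function_call = { name };
  }
  return body;
}

// Hypothetical helper: read and parse the JSON arguments from either form.
function parseForcedFunctionArguments(responseData) {
  const message = responseData.choices[0].message;
  const args = message.tool_calls && message.tool_calls.length > 0
    ? message.tool_calls[0].function.arguments
    : message.function_call.arguments;
  return JSON.parse(args);
}

// Usage with a mocked legacy-style response:
const mockResponse = {
  choices: [{
    message: {
      function_call: { name: "format_response", arguments: '{"commonSchema": [], "specificSchema": []}' }
    }
  }]
};
console.log(parseForcedFunctionArguments(mockResponse)); // { commonSchema: [], specificSchema: [] }
```

Keeping the parser tolerant of both shapes means the rest of the rule does not change if you later switch the request to `tools`.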

For our example, we make a simple function call to format the response into structured data by passing the `formCellSchema` below as an input parameter.

```javascript
// JSON Schema for the format_response function: the model must return the
// common and specific form cells as arrays of { title, value, type }.
const formCellSchema = {
  "type": "object",
  "properties": {
    "commonSchema": {
      "type": "array",
      "description": "Refers to each item in the common schema array",
      "items": {
        "type": "object",
        "properties": {
          "title": {
            "type": "string",
            "description": "Title of the property. Must be in camel case and match exactly as defined in schema"
          },
          "value": {
            "type": "string",
            "description": "Value of the property. Must be an empty string if it takes in a string/number. Must be false if it takes in a boolean. Otherwise, it must be an empty string"
          },
          "type": {
            "type": "string",
            "description": "If the schema object is a value of string or number, it will be 'Control.Type.FormCell.SimpleProperty'. If it is a boolean it will be 'Control.Type.FormCell.Switch'. Otherwise, it is a 'Control.Type.FormCell.SegmentedControl'"
          }
        },
        "required": ["title", "value", "type"]
      }
    },
    "specificSchema": {
      "type": "array",
      "description": "Refers to each item in the specific schema array",
      "items": {
        "type": "object",
        "properties": {
          "title": {
            "type": "string",
            "description": "Title of the property. Must be in camel case and match exactly as defined in schema"
          },
          "value": {
            "type": "string",
            "description": "Value of the property. Must be an empty string if it takes in a string/number. Must be false if it takes in a boolean. Otherwise, it must be an empty string"
          },
          "type": {
            "type": "string",
            "description": "If the schema object is a value of string or number, it will be 'Control.Type.FormCell.SimpleProperty'. If it is a boolean it will be 'Control.Type.FormCell.Switch'. Otherwise, it is a 'Control.Type.FormCell.SegmentedControl'"
          }
        },
        "required": ["title", "value", "type"]
      }
    }
  },
  "required": ["commonSchema", "specificSchema"]
};
```

The `formCellSchema` object defines the structure the model must follow when generating form cells. It has two parts, `commonSchema` and `specificSchema`, each an array of objects that describe one form cell (title, initial value, and MDK control type). `commonSchema` corresponds to the `commonSchema` of the equipment schema, and `specificSchema` corresponds to its `SAPPump` part; the equipment schema is the one returned by `getRagResponse` from the SAP HANA Cloud vector engine in the previous step. With the above code, the SAP AI Core service generates MDK metadata for both groups of form cells. For our example, the result looks like this:

```text
Form cells schema: {
  "specificSchema": [
    { "title": "yearOfInstallation", "value": "", "type": "Control.Type.FormCell.SimpleProperty" },
    { "title": "length", "value": "", "type": "Control.Type.FormCell.SimpleProperty" }
  ],
  "commonSchema": [
    { "title": "taskTitle", "value": "", "type": "Control.Type.FormCell.SimpleProperty" },
    { "title": "shortDescription", "value": "", "type": "Control.Type.FormCell.SimpleProperty" },
    { "title": "description", "value": "", "type": "Control.Type.FormCell.SimpleProperty" },
    { "title": "priority", "value": "", "type": "Control.Type.FormCell.SimpleProperty" }
  ]
}
```
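Because this metadata is generated by a model, it can be worth checking before a page is built from it: the function-calling schema asks for titles that match the equipment schema exactly and for one of three control types, but the model is not strictly bound to that. A minimal sketch of such a check follows; `findInvalidFormCells` is a hypothetical helper, and the list of expected titles is assumed to come from the equipment schema.

```javascript
// The three control types that the formCellSchema descriptions allow.
const ALLOWED_TYPES = new Set([
  "Control.Type.FormCell.SimpleProperty",
  "Control.Type.FormCell.Switch",
  "Control.Type.FormCell.SegmentedControl",
]);

// Hypothetical check: returns a list of problems found in the generated
// form cells; an empty list means every cell passed.
function findInvalidFormCells(generated, expectedTitles) {
  const problems = [];
  const cells = [...(generated.commonSchema || []), ...(generated.specificSchema || [])];
  const seen = new Set();
  for (const cell of cells) {
    if (!expectedTitles.includes(cell.title)) {
      problems.push(`unexpected title: ${cell.title}`);
    }
    if (!ALLOWED_TYPES.has(cell.type)) {
      problems.push(`unsupported type for ${cell.title}: ${cell.type}`);
    }
    seen.add(cell.title);
  }
  // Every title from the equipment schema should have produced a cell
  for (const title of expectedTitles) {
    if (!seen.has(title)) problems.push(`missing title: ${title}`);
  }
  return problems;
}

const expected = ["taskTitle", "shortDescription", "description", "priority", "yearOfInstallation", "length"];
const generated = {
  commonSchema: [{ title: "taskTitle", value: "", type: "Control.Type.FormCell.SimpleProperty" }],
  specificSchema: [{ title: "Length", value: "", type: "Control.Type.FormCell.SimpleProperty" }],
};
console.log(findInvalidFormCells(generated, expected));
```

Here the mis-cased `Length` is reported as unexpected and the remaining five titles as missing, so the rule could retry the call instead of rendering an incomplete form.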

Next, we save this metadata in the page's client data for later use:

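```javascript
// Runs inside the rule that generated formCellSchema above
const pageProxy = context.evaluateTargetPathForAPI("#Page:MDKGenAIPage");
const cd = pageProxy.getClientData();
if (!cd) { console.log("Empty"); return; }
if (!cd.OperationsData) {
  cd.OperationsData = [];
}
// Combine the common and equipment-specific form cells into one array
const schema = [...formCellSchema.commonSchema, ...formCellSchema.specificSchema];
// `tasks` holds the operations created earlier in the rule (not shown here);
// store the form cell schema on each of them as a JSON string
tasks.forEach(task => {
  task.schema = JSON.stringify(schema);
});
cd.OperationsData = [...cd.OperationsData, ...tasks];
```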

`PageProxy` is a developer-facing interface that provides access to a page and is passed to rules to enable application-specific customizations. Here, we use the client data of the `PageProxy` of the current MDK page, `MDKGenAIPage`, to store the above metadata.

Next, we use this metadata to create an MDK page. Instead of defining the page with static metadata, we use the following JavaScript rule to create the page dynamically from the metadata above.

```javascript
import ParseKey from "./ParseKey";

// Build form cell controls from the schema stored on the bound operation,
// pre-filling any value that the operation data already contains.
function getFormCellSchema(context) {
  try {
    const binding = context.binding;
    const schema = JSON.parse(binding.OperationData?.schema);
    const results = [];
    schema.forEach(formcell => {
      let value = formcell.value;
      if (Object.prototype.hasOwnProperty.call(binding.OperationData, formcell.title)) {
        value = binding.OperationData[formcell.title];
      }
      results.push({
        "_Type": formcell.type,
        "Caption": ParseKey(formcell.title),
        "Value": value,
        "_Name": formcell.title,
      });
    });
    return results;
  } catch (e) {
    console.log(e);
  }
}

export default function NavToOperationEdit(context) {
  try {
    const pageProxy = context.getPageProxy();
    const schema = getFormCellSchema(context);
    const pageMetadataObj = {
      "Caption": "Operation Detail Edit",
      "ActionBar": {
        "Items": [
          {
            "Position": "right",
            "SystemItem": "sparkles",
            "Text": "Edit",
            "OnPress": "/MDKDevApp/Rules/ImageAnalysis/NavToRecordPage.js"
          },
          {
            "Position": "right",
            "Text": "Confirm",
            "OnPress": "/MDKDevApp/Rules/ImageAnalysis/SaveData.js"
          }
        ]
      },
      "Controls": [
        {
          "Sections": [
            {
              "_Type": "Section.Type.FormCell",
              "_Name": "FormCellSection",
              // The dynamically generated form cells
              "Controls": schema
            }
          ],
          "_Name": "SectionedTable",
          "_Type": "Control.Type.SectionedTable"
        }
      ],
      "_Name": "OperationEdit",
      "_Type": "Page"
    };
    // Open the empty page, replacing its content with the generated metadata
    const pageMetadata = JSON.stringify(pageMetadataObj);
    return pageProxy.executeAction({
      "Name": "/MDKDevApp/Actions/NavToPage.action",
      "Properties": {
        "PageToOpen": "/MDKDevApp/Pages/Empty.page",
        "PageMetadata": pageMetadata
      }
    });
  } catch (e) {
    console.log(e);
  }
}
```

First, `getFormCellSchema` reads the form cell schema stored with the bound operation data (saved in the previous step) and turns each entry into a form cell control, using any value the operation already has. Next, we define the page metadata object `pageMetadataObj`; note that the `Controls` of `FormCellSection` are exactly these generated form cells. Finally, `pageMetadataObj` is stringified and passed as `PageMetadata` to the navigation action, replacing the content of an empty page. In this way, a page like the one in the screenshot below is created dynamically.
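The mapping in `getFormCellSchema` can be tried outside MDK with plain data. In the sketch below, `buildFormCellControls` mirrors that mapping without `context`, and `parseKey` is a hypothetical stand-in for the imported `ParseKey` rule, which is not shown in this blog and may format captions differently.

```javascript
// Hypothetical stand-in for the ParseKey rule: turn a camelCase title into a
// caption, e.g. "yearOfInstallation" -> "Year Of Installation".
function parseKey(key) {
  const spaced = key.replace(/([a-z0-9])([A-Z])/g, "$1 $2");
  return spaced.charAt(0).toUpperCase() + spaced.slice(1);
}

// Same mapping as getFormCellSchema, without the MDK context: prefer a value
// already present in the operation data, otherwise keep the schema default.
function buildFormCellControls(operationData) {
  const schema = JSON.parse(operationData.schema);
  return schema.map((formcell) => ({
    _Type: formcell.type,
    Caption: parseKey(formcell.title),
    Value: Object.prototype.hasOwnProperty.call(operationData, formcell.title)
      ? operationData[formcell.title]
      : formcell.value,
    _Name: formcell.title,
  }));
}

const operationData = {
  schema: JSON.stringify([
    { title: "yearOfInstallation", value: "", type: "Control.Type.FormCell.SimpleProperty" },
    { title: "length", value: "", type: "Control.Type.FormCell.SimpleProperty" },
  ]),
  yearOfInstallation: "2001",
};
console.log(buildFormCellControls(operationData));
```

The result is the array that ends up as the `Controls` of `FormCellSection`: the year cell is pre-filled with "2001" and the length cell keeps its empty default.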

Now we fill out the operation detail form to create a new work order for equipment maintenance. Instead of manually entering the required information, such as the year of installation and the length of the water pipe, we implement hands-free voice input on the mobile device. First, we use the NativeScript speech recognition plugin to convert voice input to text. To include it, add `nativescript-speech-recognition` to the `Externals` and `NSPlugins` entries in `MDKProject.json`:

```json
{
  "AppName": "MDK GenAI",
  "AppVersion": "1.0.0",
  "BundleID": "com.sap.mdk.genai",
  "Externals": ["nativescript-speech-recognition"],
  "NSPlugins": ["nativescript-speech-recognition"],
  "UrlScheme": "mdkclient"
}
```

Then we can use the plugin in a JavaScript rule:

```javascript
// Helper for the fixed POC wait below
const sleep = (ms) => new Promise(resolve => setTimeout(resolve, ms));

// Capture voice input with the nativescript-speech-recognition plugin and
// return the recognized text. UpdateProgress and DismissProgress are helper
// functions of the app that are not shown in this blog.
export default async function VoiceInput(context) {
  let userInput = "";
  if (context.nativescript.platformModule.isIOS || context.nativescript.platformModule.isAndroid) {
    // Require and instantiate the plugin
    const SpeechRecognition = require("nativescript-speech-recognition").SpeechRecognition;
    const speechRecognition = new SpeechRecognition();
    let pageProxy;
    let shouldUpdateNote = false;
    try {
      pageProxy = context.evaluateTargetPathForAPI("#Page:SpeechAnalysisUseCasePage");
    } catch (err) {
      pageProxy = context.evaluateTargetPathForAPI("#Page:OperationDetail");
      shouldUpdateNote = true;
    }
    // Save the speech recognition object so that listening can be stopped later
    const cd = pageProxy.getClientData();
    cd.SpeechRecognitionObj = speechRecognition;
    try {
      const available = await speechRecognition.available();
      if (available) {
        cd.isListening = true;
        cd.recordingRestarted = true;
        context.getParent().redraw();
        // startListening resolves once listening has started;
        // the recognized text arrives through the onResult callback
        await speechRecognition.startListening({
          // Set to true to get results back continuously
          returnPartialResults: true,
          // Invoked repeatedly during recognition
          onResult: (transcription) => {
            UpdateProgress(context, transcription.text, shouldUpdateNote, cd);
            userInput = transcription.text;
            if (transcription.finished) {
              DismissProgress(context, shouldUpdateNote);
            }
          },
          onError: (error) => {
            console.log(`Speech recognition error: ${error}`);
          }
        });
        // Make the note field read-only while recording
        const currentPageProxy = context.getPageProxy();
        const sectionProxy = currentPageProxy.evaluateTargetPathForAPI("#Section:FormCellSection");
        const controlProxy = sectionProxy.getControl('NoteFormCell');
        controlProxy.setEditable(false);
      }
    } catch (error) {
      console.log(`Error: ${error}`);
    }
    // For the POC, wait 10 seconds for speech recognition to finish
    await sleep(10000);
    console.log('The voice input has finished!');
    return userInput;
  } else {
    // Voice input is not supported on web; return the default (empty) input
    return userInput;
  }
}
```
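The `VoiceInput` rule waits a fixed 10 seconds, which its comment marks as a proof-of-concept shortcut, regardless of when recognition actually finishes. One alternative is to resolve a promise from the `onResult` callback once `finished` is true, with a timeout as a fallback. The sketch below is written against a mocked recognizer that mimics the `startListening({ onResult, onError })` callback style used in the rule; `waitForFinalTranscription` is a hypothetical helper, and wiring it to the real plugin (including stopping the recognizer on timeout) is left as an assumption to verify on a device.

```javascript
// Hypothetical alternative to the fixed 10-second wait: resolve with the final
// transcription, or with the latest partial text once timeoutMs has elapsed.
function waitForFinalTranscription(recognizer, timeoutMs) {
  return new Promise((resolve, reject) => {
    let latestText = "";
    let settled = false;
    // Settle the promise exactly once and cancel the pending timeout
    const settle = (callback, valueOrError) => {
      if (settled) return;
      settled = true;
      clearTimeout(timer);
      callback(valueOrError);
    };
    const timer = setTimeout(() => settle(resolve, latestText), timeoutMs);
    recognizer
      .startListening({
        returnPartialResults: true,
        onResult: (transcription) => {
          latestText = transcription.text;
          if (transcription.finished) settle(resolve, transcription.text);
        },
        onError: (error) => settle(reject, error),
      })
      .catch((error) => settle(reject, error));
  });
}

// Mocked recognizer that delivers one partial and one final result.
const mockRecognizer = {
  startListening(options) {
    const results = ["The water pump", "The water pump was installed in 2001"];
    results.forEach((text, i) => {
      setTimeout(
        () => options.onResult({ text, finished: i === results.length - 1 }),
        50 * (i + 1)
      );
    });
    return Promise.resolve(true);
  },
};

waitForFinalTranscription(mockRecognizer, 1000).then((text) => console.log(text));
```

With this approach, the rule returns as soon as the user stops speaking, and the timeout only applies when no final result arrives.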

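The `VoiceInput` rule converts voice input into text and returns it; the recognizer itself is stored in the page's client data as `SpeechRecognitionObj` so that recording can be stopped later. Recording is started and stopped from an MDK modal page such as the following: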

```json
{
  "Caption": "Use Voice or Free Text",
  "ActionBar": {
    "Items": [
      {
        "Position": "left",
        "SystemItem": "Cancel",
        "OnPress": "/MDKDevApp/Actions/ClosePage.action"
      },
      {
        "Position": "right",
        "Caption": "Confirm",
        "OnPress": "/MDKDevApp/Rules/ImageAnalysis/SubmitTextToEditForm.js"
      }
    ]
  },
  "Controls": [
    {
      "_Type": "Control.Type.SectionedTable",
      "_Name": "SectionedTable1",
      "Sections": [
        {
          "ObjectHeader": {
            "Subhead": "Guided questions: ",
            "Description": "/MDKDevApp/Rules/SpeechAnalysis/GetGuidedQuestions.js"
          },
          "Header": { "UseTopPadding": false },
          "_Type": "Section.Type.ObjectHeader"
        },
        {
          "_Type": "Section.Type.FormCell",
          "Header": { "UseTopPadding": false },
          "Footer": { "UseBottomPadding": false },
          "_Name": "FormCellSection",
          "Controls": [
            {
              "_Type": "Control.Type.FormCell.Note",
              "_Name": "NoteFormCell",
              "IsEditable": true,
              "Enabled": true,
              "MaxNumberOfLines": 0,
              "PlaceHolder": "Your response to the question for form completion.",
              "validationProperties": {
                "ValidationMessage": "Validation Message",
                "ValidationMessageColor": "ff0000",
                "SeparatorBackgroundColor": "000000",
                "SeparatorIsHidden": false,
                "ValidationViewBackgroundColor": "fffa00",
                "ValidationViewIsHidden": true,
                "MaxNumberOfLines": 2
              }
            }
          ]
        },
        {
          "_Type": "Section.Type.ButtonTable",
          "Separators": { "ControlSeparator": false },
          "Header": { "UseTopPadding": false },
          "Footer": { "UseBottomPadding": false },
          "Layout": { "LayoutType": "Horizontal", "HorizontalAlignment": "Center" },
          "Buttons": [
            {
              "ButtonType": "Primary",
              "Title": "Record",
              "Image": "sap-icon://microphone",
              "OnPress": "/MDKDevApp/Rules/SpeechAnalysis/StartRecording.js",
              "Visible": "/MDKDevApp/Rules/ImageAnalysis/GetRecordControlVisibility.js",
              "_Name": "Record"
            },
            {
              "ButtonType": "Primary",
              "Semantic": "Negative",
              "Title": "Stop",
              "Image": "sap-icon://stop",
              "OnPress": "/MDKDevApp/Rules/SpeechAnalysis/StopRecording.js",
              "Visible": "/MDKDevApp/Rules/ImageAnalysis/GetRecordControlVisibility.js",
              "_Name": "Stop"
            }
          ]
        },
        {
          "Header": { "UseTopPadding": false },
          "_Type": "Section.Type.Image",
          "_Name": "PlaceholderForPadding",
          "Image": "/MDKDevApp/Images/transparent.png",
          "Height": 10
        }
      ]
    }
  ],
  "_Name": "OperationRecord",
  "_Type": "Page"
}
```

The screenshot below shows this modal page.

At this point, you may ask: the converted voice input is just plain text, such as “The water pump was installed in the year 2001, and the water pipe is 10 meters long,” which is unstructured data. How do we identify the useful information in that text, enter it into the form, and submit it to the OData service to create a work order record? We again use the SAP AI Core service to do this.

We call the following JavaScript function:

```javascript
// Ask the LLM (via SAP AI Core) to extract form values from the transcribed
// speech. `schema` is the form cell schema saved earlier (a JSON string).
async function convertSpeechIntoStructuredData(context, userInput, schema) {
  const method = "POST";
  const path = "/chat/completions?api-version=2024-02-01";
  const body = {
    messages: [
      {
        role: "system",
        content: `Convert the following speech to structured data as defined in ${schema}. The properties in the schema
are optional so if the speech does not contain the information on some property, do not include it.`
      },
      {
        role: "user",
        content: userInput
      }
    ],
    max_tokens: 512,
    temperature: 0,
    frequency_penalty: 0,
    presence_penalty: 0,
    functions: [{ name: "format_response", parameters: structuredDataSchema }],
    function_call: { name: "format_response" },
  };
  const headers = {
    "content-type": "application/json",
    "AI-Resource-Group": "default"
  };
  const service = "/MDKDevApp/Services/AzureOpenAI.service";
  console.log("Converting natural language to structured data…");
  return context.executeAction({
    "Name": "/MDKDevApp/Actions/SendRequest.action",
    "Properties": {
      "Target": {
        "Path": path,
        "RequestProperties": {
          "Method": method,
          "Headers": headers,
          "Body": JSON.stringify(body)
        },
        "Service": service
      },
      // The activity indicator text is an action property, so it belongs in Properties
      "ActivityIndicatorText": "Converting natural language to structured data…"
    }
  }).then(response => {
    const resultsObj = JSON.parse(response.data.choices[0].message.function_call.arguments);
    console.log("Structured data results:", resultsObj);
    return resultsObj;
  });
}
```

By now, this pattern should look familiar: we have already used it several times, for meter reading, anomaly detection, maintenance guideline generation, and more.

In the POST request body, `${schema}` is the form cell schema we saved in the earlier step. As before, we use an LLM function call, `format_response`, to specify the structured output we want from the SAP AI Core service. Its `parameters` are set to `structuredDataSchema`, which defines the schema of that output:

```javascript
// JSON Schema for format_response: a list of { title, value } pairs whose
// titles must match the form cell schema.
const structuredDataSchema = {
  "type": "object",
  "properties": {
    "formCell": {
      "type": "array",
      "description": "Refers to each item in the schema array. The title property must completely match the one defined in schema",
      "items": {
        "type": "object",
        "properties": {
          "title": {
            "type": "string",
            "description": "Title of the property"
          },
          "value": {
            "type": "string",
            "description": "Value of the property"
          }
        },
        "required": ["title", "value"]
      }
    }
  },
  "required": ["formCell"]
};
```

The SAP AI Core service then processes the input “The water pump was installed in the year 2001, and the water pipe is 10 meters long,” extracts the useful information, and returns structured data that follows `structuredDataSchema`:

```text
Structured data results: {
  "formCell": [
    { "title": "yearOfInstallation", "value": "2001" },
    { "title": "length", "value": "10 meter" }
  ]
}
```

The output structured data will be populated into the form cells accordingly and submitted to the OData service to create a new work order for equipment operation and maintenance.
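The merge step itself is not shown in this blog. A minimal sketch, assuming the combined form cell schema saved earlier (`title`, `value`, `type`) and the `formCell` array returned by the model: values are copied only for titles the form actually has, so an unexpected title from the model is ignored rather than creating a new field. `applyStructuredData` is a hypothetical helper name.

```javascript
// Hypothetical merge: copy model-extracted values into the matching form
// cells by title; cells the speech did not mention keep their current value.
// Returns new objects and leaves the input schema unchanged.
function applyStructuredData(schema, structuredData) {
  const extracted = new Map(
    (structuredData.formCell || []).map((item) => [item.title, item.value])
  );
  return schema.map((cell) =>
    extracted.has(cell.title) ? { ...cell, value: extracted.get(cell.title) } : cell
  );
}

const schema = [
  { title: "taskTitle", value: "", type: "Control.Type.FormCell.SimpleProperty" },
  { title: "yearOfInstallation", value: "", type: "Control.Type.FormCell.SimpleProperty" },
  { title: "length", value: "", type: "Control.Type.FormCell.SimpleProperty" },
];
const structuredData = {
  formCell: [
    { title: "yearOfInstallation", value: "2001" },
    { title: "length", value: "10 meter" },
  ],
};
console.log(applyStructuredData(schema, structuredData));
```

Matching strictly by title is why both function-calling schemas insist that titles match the equipment schema exactly.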

To summarize, in this blog we provided technical details and examples of how to use the SAP AI Core service in an MDK app to convert natural language input into structured data with the help of Retrieval Augmented Generation and LLM function calling. The structured data is then populated into form cells and submitted to the OData service to create a work order for equipment operation and maintenance.

This marks the temporary end of our series on SAP Mobile Development Kit (MDK) integration with SAP AI Core service. Thank you for joining me throughout this series. However, this is not the end, but just the beginning of our MDK AI journey. We will continue to update you with new AI-related features for MDK, so stay tuned!

In this series of blogs, I will showcase how to integrate SAP AI Core services into SAP Mobile Development Kit (MDK) to develop mobile applications with AI capabilities.Part 1: SAP Mobile Development Kit (MDK) integration with SAP AI Core services – SetupPart 2: SAP Mobile Development Kit (MDK) integration with SAP AI Core services – Business Use CasesPart 3: SAP Mobile Development Kit (MDK) integration with SAP AI Core services – Measurement ReadingsPart 4: SAP MDK integration with SAP AI Core services – Anomaly Detection and Maintenance GuidelinesPart 5: SAP MDK integration with SAP AI Core services – Retrieval Augmented GenerationPart 6: SAP Mobile Development Kit (MDK) integration with SAP AI Core services – Work Order and Operation Recording (Current Blog)In the previous blog, we showcased how to setup the SAP HANA Cloud vector engine and understand Retrieval Augmented Generation (RAG). In this blog, we will explore how to record orders and operations using voice and free text input.SAP Mobile Development Kit (MDK) integration with SAP AI Core services – Work Order and Operation Recording First, we will introduce how RAG is used in our use case. RAG is used during AI calls to generate specific form cells on the operation details page. For example, a water pump may have different details compared to a laptop. RAG ensures that the AI generates the appropriate operation details. Currently, the knowledge base, which we introduced in a preview blog, is limited to a few equipment types (e.g., water pumps, electricity meters) with very limited content. In a real case, there will be more equipment types, each having a large volume of information. In that case, we will benefit from the fast similarity searching capability of the SAP HANA Vector Database. 
In our blog, we will showcase a very simple example with the equipment schema as the only useful knowledge.We will use a JavaScript function to retrieve the equipment schema from an instance of the HANA Vector Database. This schema includes both the common schema and specific schema. Remember the common schema and specific schema of the water pump as part of our domain specific knowledge, that we populated into the SAP HANA Cloud vector database in the previous blog? Let’s refresh our memory below. Common Schema

[

{“Title”: “taskTitle”, “Description”: “Operation Title”, “Type”: “Text”},

{“Title”: “shortDescription”, “Description”: “Operation short description”, “Type”: “Text”},

{“Title”: “description”, “Description”: “Detailed operation description”, “Type”: “Text”},

{“Title”: “priority”, “Description”: “priority (high, medium, low)”, “Type”: “Text”},

]

Specific Schema for SAPPump:

[

{“Title”: “yearOfInstallation”, “Description”: “Year of installation”, “Type”: “Text”},

{“Title”: “length”, “Description”: “Length of the water pump in meters”, “Type”: “Text”}

] Now we will use the JavaScript function below to retrieve the water pump equipment schema. async function getEquipmentSchema(context, equipmentName) {

const functionName = ‘getRagResponse’;

const serviceName = ‘/MDKDevApp/Services/CAPVectorEngine.service’;

const parameters = {

“value”: `In JSON, Get the Common schema and the specific schema of the equipment ${equipmentName}. The common schema cannot be empty. The title of each property must be in camel cases. In camel case, you start a name with a small letter. If the name has multiple words, the later words will start with a capital letter`

};

let oFunction = { Name: functionName, Parameters: parameters };

try {

const response = await context.callFunction(serviceName, oFunction);

const responseObj = JSON.parse(response);

return responseObj.completion.content;

} catch (error) {

console.log(“Error:”, error.message);

}

return Promise.resolve(“”);

} The above code snippet uses the MDK Client API’s `callFunction` method to call the OData function `getRagResponse` on the `CAPVectorEngine` OData service. It retrieves the common schema and the specific schema of the equipment in JSON format.The returned result of equipment schema is as below Equipment schema: {

“commonSchema”: {

“taskTitle”: “Text”,

“shortDescription”: “Text”,

“description”: “Text”,

“priority”: “Text”

},

“SAPPump”: {

“yearOfInstallation”: “Text”,

“length”: “Text”

}

} Once the equipment schema is fetched, we will use it to generate a form cell // Generate form cells

console.log(“Preparing form cells…”)

spinnerID = context.showActivityIndicator(‘Preparing forms…’);

const formCellSchema = await generateFormCells(context, equipmentSchema);

console.log(“Form cells schema:”, formCellSchema);

console.log(`Form cells prepared!`);

context.dismissActivityIndicator(); Here, we will call the `generateFormCells` function with the equipment schema fetched above as the input parameter. async function generateFormCells(context, schema) {

const method = “POST”;

const path = “/chat/completions?api-version=2024-02-01”;

const body = {

messages: [

{

role: “user”,

content: `Generate form filling cells based on the schema provided as mentioned. ${schema}`

}

],

max_tokens: 256,

temperature: 0,

frequency_penalty: 0,

presence_penalty: 0,

functions: [{ name: “format_response”, parameters: formCellSchema }],

function_call: { name: “format_response” },

};

const headers = {

“content-type”: “application/json”,

“AI-Resource-Group”: “default”

};

const service = “/MDKDevApp/Services/AzureOpenAI.service”;

return context.executeAction({

“Name”: “/MDKDevApp/Actions/SendRequest.action”,

“Properties”: {

“ShowActivityIndicator”: false,

“Target”: {

“Path”: path,

“RequestProperties”: {

“Method”: method,

“Headers”: headers,

“Body”: JSON.stringify(body)

},

“Service”: service

}

}

}).then(response => {

const resultsObj = JSON.parse(response.data.choices[0].message.function_call.arguments);

return resultsObj;

});

} Again, we will send a POST request to the SAP AI Core service via the AzureOpenAI destination, using the same AI Core service deployment as in previous blogs for anomaly detection and meter reading. The difference is that this time we will not upload an image; instead, we will use the equipment schema we obtained in the last step as the content. content: `Generate form filling cells based on the schema provided as mentioned. ${schema}` We also use an LLM function call to specify the structured output we want from the AI service. Please note that how to define an LLM function call may vary between different LLMs. This example is based on OpenAI GPT-4. For details, refer to OpenAI’s documentation (https://platform.openai.com/docs/guides/function-calling ). If you are using another LLM, consult the respective vendor’s documentation to understand how to make function calls or, more generally, how to connect LLMs to external tools. functions: [{ name: “format_response”, parameters: formCellSchema }],

function_call: { name: “format_response” }, For our example, we make a simple function call to format the response into structured data by passing the `formCellSchema` below as an input parameter. const formCellSchema = {

“type”: “object”,

“properties”: {

“commonSchema”: {

“type”: “array”,

“description”: “Refers to each item in the common schema array”,

“items”: {

“type”: “object”,

“properties”: {

“title”: {

“type”: “string”,

“description”: “Title of the property. Must be in camel case and match exactly as defined in schema”

},

“value”: {

“type”: “string”,

"description": "Value of the property. Must be an empty string if it takes in a string/number. Must be false if it takes in a boolean. Otherwise, it must be an empty string"
},
"type": {
"type": "string",
"description": "If the schema object is a value of string or number, it will be 'Control.Type.FormCell.SimpleProperty'. If it is a boolean it will be 'Control.Type.FormCell.Switch'. Otherwise, it is a 'Control.Type.FormCell.SegmentedControl'"
}
},
"required": ["title", "value", "type"]
}
},
"specificSchema": {
"type": "array",
"description": "Refers to each item in the specific schema array",
"items": {
"type": "object",
"properties": {
"title": {
"type": "string",
"description": "Title of the property. Must be in camel case and match exactly as defined in schema"
},
"value": {
"type": "string",
"description": "Value of the property. Must be an empty string if it takes in a string/number. Must be false if it takes in a boolean. Otherwise, it must be an empty string"
},
"type": {
"type": "string",
"description": "If the schema object is a value of string or number, it will be 'Control.Type.FormCell.SimpleProperty'. If it is a boolean it will be 'Control.Type.FormCell.Switch'. Otherwise, it is a 'Control.Type.FormCell.SegmentedControl'"
}
},
"required": ["title", "value", "type"]
}
}
},
"required": ["commonSchema", "specificSchema"]
};

The formCellSchema object defines the structure used to generate form cells. It has two parts, commonSchema and specificSchema. Both are arrays of objects that describe the properties of form cells:

- commonSchema corresponds to the commonSchema of EquipmentSchema.
- specificSchema corresponds to SAPPump in EquipmentSchema.

EquipmentSchema is returned by getRagResponse from the SAP HANA Cloud vector database in the previous step. With the above code, the AI Core service generates MDK metadata for two groups of form cells, common and specific. For our example, the result looks like this:

Form cells schema: {
  "specificSchema": [
    {
      "title": "yearOfInstallation",
      "value": "",
      "type": "Control.Type.FormCell.SimpleProperty"
    },
    {
      "title": "length",
      "value": "",
      "type": "Control.Type.FormCell.SimpleProperty"
    }
  ],
  "commonSchema": [
    {
      "title": "taskTitle",
      "value": "",
      "type": "Control.Type.FormCell.SimpleProperty"
    },
    {
      "title": "shortDescription",
      "value": "",
      "type": "Control.Type.FormCell.SimpleProperty"
    },
    {
      "title": "description",
      "value": "",
      "type": "Control.Type.FormCell.SimpleProperty"
    },
    {
      "title": "priority",
      "value": "",
      "type": "Control.Type.FormCell.SimpleProperty"
    }
  ]
}

Now we save the above metadata in the page's client data so we can use it later:

const pageProxy = context.evaluateTargetPathForAPI("#Page:MDKGenAIPage");
const cd = pageProxy.getClientData();
if (!cd) { console.log("Empty"); return; }
if (!cd.OperationsData) {
  cd.OperationsData = [];
}
// Merge the common and specific form cells into one list
const schema = [...formCellSchema.commonSchema, ...formCellSchema.specificSchema];
// task and tasks come from the surrounding rule, which is not shown in this blog
task.schema = JSON.stringify(schema);
cd.OperationsData = [...cd.OperationsData, ...tasks];

`PageProxy` is a developer-facing interface that provides access to a page. It is passed to rules to enable application-specific customizations. Here, we use the client data of the `PageProxy` for the current MDK page, `MDKGenAIPage`, to store the above metadata.

Next, we use this metadata to create an MDK page. Instead of creating the page from static page metadata, we use the following JavaScript rule to create the page dynamically:

import ParseKey from "./ParseKey";

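// Builds MDK FormCell control definitions from the schema stored on the bound operation
function getFormCellSchema(context) {
  try {
    const binding = context.binding;
    const schema = JSON.parse(binding.OperationData?.schema);
    const results = [];
    schema.forEach(formcell => {
      // Start from the schema's default value; use the operation's own value if it has one
      let value = formcell.value;
      for (const key in binding.OperationData) {
        if (Object.prototype.hasOwnProperty.call(binding.OperationData, key) && formcell.title === key) {
          value = binding.OperationData[key];
        }
      }
      results.push({
        "_Type": formcell.type,
        "Caption": ParseKey(formcell.title),
        "Value": value,
        "_Name": formcell.title,
      });
    });
    return results;
  } catch (e) {
    console.log(e);
    // Return an empty list so the section's Controls is never undefined
    return [];
  }
}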

export default function NavToOperationEdit(context) {
  try {
    const pageProxy = context.getPageProxy();
    const schema = getFormCellSchema(context);
    // Page metadata built at runtime; the form cells come from the stored schema
    const pageMetadataObj = {
      "Caption": "Operation Detail Edit",
      "ActionBar": {
        "Items": [
          {
            "Position": "right",
            "SystemItem": "sparkles",
            "Text": "Edit",
            "OnPress": "/MDKDevApp/Rules/ImageAnalysis/NavToRecordPage.js"
          },
          {
            "Position": "right",
            "Text": "Confirm",
            "OnPress": "/MDKDevApp/Rules/ImageAnalysis/SaveData.js"
          }
        ]
      },
      "Controls": [
        {
          "Sections": [
            {
              "_Type": "Section.Type.FormCell",
              "_Name": "FormCellSection",
              "Controls": schema
            }
          ],
          "_Name": "SectionedTable",
          "_Type": "Control.Type.SectionedTable"
        }
      ],
      "_Name": "OperationEdit",
      "_Type": "Page"
    };
    const pageMetadata = JSON.stringify(pageMetadataObj);
    // Open an empty page whose content is replaced by the generated metadata
    return pageProxy.executeAction({
      "Name": "/MDKDevApp/Actions/NavToPage.action",
      "Properties": {
        "PageToOpen": "/MDKDevApp/Pages/Empty.page",
        "PageMetadata": pageMetadata
      }
    });
  } catch (e) {
    console.log(e);
  }
}

First, the getFormCellSchema function builds the form cell controls from the schema stored on the current binding (`context.binding.OperationData`). Where the operation already has a value for a cell, it uses that value. Next, we define the page metadata object pageMetadataObj. Note that the value of Controls in FormCellSection is the list of form cells returned by getFormCellSchema. Finally, pageMetadataObj is stringified and passed as PageMetadata, which replaces the content of an empty page. In this way, a page like the one in the screenshot below is created dynamically.

Now we fill out the operation detail form to create a new work order for equipment maintenance. We do not want users to type in the required information by hand, such as the year of installation and the length of the water pipe. Instead, we implement hands-free voice input on the mobile device. First, we use the NativeScript speech-recognition plugin to convert voice input to text. Simply add nativescript-speech-recognition to MDKProject.json:

{
  "AppName": "MDK GenAI",
  "AppVersion": "1.0.0",
  "BundleID": "com.sap.mdk.genai",
  "Externals": ["nativescript-speech-recognition"],
  "NSPlugins": ["nativescript-speech-recognition"],
  "UrlScheme": "mdkclient"
}

Then we can use the plugin in a JavaScript rule as follows:

// sleep is not defined in the original snippet; a minimal version is assumed here
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

export default async function VoiceInput(context) {
  let userInput = "";
  if (context.nativescript.platformModule.isIOS || context.nativescript.platformModule.isAndroid) {
    // require the plugin
    const SpeechRecognition = require("nativescript-speech-recognition").SpeechRecognition;
    // instantiate the plugin
    const speechRecognition = new SpeechRecognition();
    let pageProxy;
    let shouldUpdateNote = false;
    try {
      pageProxy = context.evaluateTargetPathForAPI("#Page:SpeechAnalysisUseCasePage");
    } catch (err) {
      pageProxy = context.evaluateTargetPathForAPI("#Page:OperationDetail");
      shouldUpdateNote = true;
    }
    // save the speech recognition object so another rule can stop listening
    const cd = pageProxy.getClientData();
    cd.SpeechRecognitionObj = speechRecognition;
    try {
      const available = await speechRecognition.available();
      if (available) {
        cd.isListening = true;
        cd.recordingRestarted = true;
        context.getParent().redraw();
        // UpdateProgress and DismissProgress are project helpers not shown in this blog
        await speechRecognition.startListening({
          // set to true to get results back continuously
          returnPartialResults: true,
          // this callback is invoked repeatedly during recognition
          onResult: (transcription) => {
            UpdateProgress(context, transcription.text, shouldUpdateNote, cd);
            userInput = transcription.text; // keep the latest result
            if (transcription.finished) {
              DismissProgress(context, shouldUpdateNote);
            }
          },
          onError: (error) => {
            console.log(`Speech recognition error: ${error}`);
          }
        });
        // make the note form cell read-only
        const currentPageProxy = context.getPageProxy();
        const sectionProxy = currentPageProxy.evaluateTargetPathForAPI("#Section:FormCellSection");
        const controlProxy = sectionProxy.getControl("NoteFormCell");
        controlProxy.setEditable(false);
      }
    } catch (error) {
      console.log(`Error: ${error}`);
    }
    // for the POC, wait 10 seconds for speech recognition to finish
    await sleep(10000);
    console.log("The voice input has finished!");
    return userInput;
  } else {
    // voice input is not supported on web; return the default input for now
    return userInput;
  }
}

With the above code snippet, voice input is converted into text and returned as userInput. The recognizer instance itself is stored in the page's client data as SpeechRecognitionObj so that it can be stopped later. The above code can be embedded in an MDK modal page such as the following:

{
  "Caption": "Use Voice or Free Text",
  "ActionBar": {
    "Items": [
      {
        "Position": "left",
        "SystemItem": "Cancel",
        "OnPress": "/MDKDevApp/Actions/ClosePage.action"
      },
      {
        "Position": "right",
        "Caption": "Confirm",
        "OnPress": "/MDKDevApp/Rules/ImageAnalysis/SubmitTextToEditForm.js"
      }
    ]
  },
  "Controls": [
    {
      "_Type": "Control.Type.SectionedTable",
      "_Name": "SectionedTable1",
      "Sections": [
        {
          "ObjectHeader": {
            "Subhead": "Guided questions: ",
            "Description": "/MDKDevApp/Rules/SpeechAnalysis/GetGuidedQuestions.js"
          },
          "Header": {
            "UseTopPadding": false
          },
          "_Type": "Section.Type.ObjectHeader"
        },
        {
          "_Type": "Section.Type.FormCell",
          "Header": {
            "UseTopPadding": false
          },
          "Footer": {
            "UseBottomPadding": false
          },
          "_Name": "FormCellSection",
          "Controls": [
            {
              "_Type": "Control.Type.FormCell.Note",
              "_Name": "NoteFormCell",
              "IsEditable": true,
              "Enabled": true,
              "MaxNumberOfLines": 0,
              "PlaceHolder": "Your response to the question for form completion.",
              "validationProperties": {
                "ValidationMessage": "Validation Message",
                "ValidationMessageColor": "ff0000",
                "SeparatorBackgroundColor": "000000",
                "SeparatorIsHidden": false,
                "ValidationViewBackgroundColor": "fffa00",
                "ValidationViewIsHidden": true,
                "MaxNumberOfLines": 2
              }
            }
          ]
        },
        {
          "_Type": "Section.Type.ButtonTable",
          "Separators": {
            "ControlSeparator": false
          },
          "Header": {
            "UseTopPadding": false
          },
          "Footer": {
            "UseBottomPadding": false
          },
          "Layout": {
            "LayoutType": "Horizontal",
            "HorizontalAlignment": "Center"
          },
          "Buttons": [
            {
              "ButtonType": "Primary",
              "Title": "Record",
              "Image": "sap-icon://microphone",
              "OnPress": "/MDKDevApp/Rules/SpeechAnalysis/StartRecording.js",
              "Visible": "/MDKDevApp/Rules/ImageAnalysis/GetRecordControlVisibility.js",
              "_Name": "Record"
            },
            {
              "ButtonType": "Primary",
              "Semantic": "Negative",
              "Title": "Stop",
              "Image": "sap-icon://stop",
              "OnPress": "/MDKDevApp/Rules/SpeechAnalysis/StopRecording.js",
              "Visible": "/MDKDevApp/Rules/ImageAnalysis/GetRecordControlVisibility.js",
              "_Name": "Stop"
            }
          ]
        },
        {
          "Header": {
            "UseTopPadding": false
          },
          "_Type": "Section.Type.Image",
          "_Name": "PlaceholderForPadding",
          "Image": "/MDKDevApp/Images/transparent.png",
          "Height": 10
        }
      ]
    }
  ],
  "_Name": "OperationRecord",
  "_Type": "Page"
}

The screenshot below shows this modal page.

You may now be wondering: the converted voice input is just plain text, such as "The water pump was installed in the year 2001, and the water pipe is 10 meters long." That is unstructured data. How do we identify the useful information in the plain text, enter it into the form, and submit it to the OData service to create a work order record? That's a good question. We again use the AI Core service for this, by calling the following JavaScript function:

async function convertSpeechIntoStucturedData(context, userInput, schema) {
  const method = "POST";
  const path = "/chat/completions?api-version=2024-02-01";
  const body = {
    messages: [
      {
        role: "system",
        content: `Convert the following speech to structured data as defined in ${schema}. The properties in the schema
are optional, so if the speech does not contain information on a property, do not include it.`
      },
      {
        role: "user",
        content: userInput
      }
    ],
    max_tokens: 512,
    temperature: 0,
    frequency_penalty: 0,
    presence_penalty: 0,
    // structuredDataSchema (defined below) constrains the shape of the model's output
    functions: [{ name: "format_response", parameters: structuredDataSchema }],
    function_call: { name: "format_response" },
  };
  const headers = {
    "content-type": "application/json",
    "AI-Resource-Group": "default"
  };
  const service = "/MDKDevApp/Services/AzureOpenAI.service";
  console.log("Converting natural language to structured data…");
  return context.executeAction({
    "Name": "/MDKDevApp/Actions/SendRequest.action",
    "Properties": {
      "Target": {
        "Path": path,
        "RequestProperties": {
          "Method": method,
          "Headers": headers,
          "Body": JSON.stringify(body)
        },
        "Service": service
      },
      "ActivityIndicatorText": "Converting natural language to structured data…"
    }
  }).then(response => {
    // The arguments of the forced function call contain the structured JSON
    const resultsObj = JSON.parse(response.data.choices[0].message.function_call.arguments);
    console.log("Structured data results:", resultsObj);
    return resultsObj;
  });
}

By now, you should be familiar with this kind of JavaScript function. We have already used it several times, for meter reading, anomaly detection, maintenance guideline generation, and so on. In the POST request body, ${schema} is the form cells schema we saved in an earlier step. As before, we use an LLM function call, format_response, to specify the structured output we want from the AI Core service. The parameters of format_response are defined by structuredDataSchema, which describes the schema of the structured output:

const structuredDataSchema = {
  "type": "object",
  "properties": {
    "formCell": {
      "type": "array",
      "description": "Refers to each item in the schema array. The title property must completely match the one defined in schema",
      "items": {
        "type": "object",
        "properties": {
          "title": {
            "type": "string",
            "description": "Title of the property"
          },
          "value": {
            "type": "string",
            "description": "Value of the property"
          }
        },
        "required": ["title", "value"]
      }
    }
  },
  "required": ["formCell"]
};

The AI Core service therefore processes the input "The water pump was installed in the year 2001, and the water pipe is 10 meters long," extracts the useful information, and follows structuredDataSchema to output structured data like this:

Structured data results: {
  "formCell": [
    {
      "title": "yearOfInstallation",
      "value": "2001"
    },
    {
      "title": "length",
      "value": "10 meter"
    }
  ]
}

The structured data is then populated into the corresponding form cells and submitted to the OData service to create a new work order for equipment operation and maintenance.

To summarize, this blog provided technical details and examples of how to use the SAP AI Core service in an MDK app to convert natural language input into structured data, with the help of Retrieval Augmented Generation and LLM function calls.

This concludes, for now, our series on SAP Mobile Development Kit (MDK) integration with SAP AI Core services. Thank you for following along. This is not the end, however, but just the beginning of our MDK AI journey. We will keep you updated on new AI-related features for MDK, so stay tuned!
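The blog does not show the code that copies the structured results into the form cells. The minimal sketch below illustrates that merge step. It assumes two inputs: the form cells schema array saved earlier, whose entries have title, value, and type, and a resultsObj shaped like the "Structured data results" output above. The helper name applyStructuredData is hypothetical. It is plain JavaScript, not an MDK API, and it matches entries by title only.

```javascript
// Hypothetical helper (not part of MDK): fills the saved form cells schema with
// the values returned by the format_response function call.
// Titles returned by the LLM that are not in the schema are ignored.
function applyStructuredData(schema, resultsObj) {
  // Lookup from form cell title to the extracted value
  const extracted = new Map(
    (resultsObj?.formCell ?? []).map((cell) => [cell.title, cell.value])
  );
  // Keep every schema entry (copied, not mutated); overwrite the value only when one was extracted
  return schema.map((formcell) =>
    extracted.has(formcell.title)
      ? { ...formcell, value: extracted.get(formcell.title) }
      : { ...formcell }
  );
}

// Usage with values from the example outputs in this blog
const schema = [
  { title: "taskTitle", value: "", type: "Control.Type.FormCell.SimpleProperty" },
  { title: "yearOfInstallation", value: "", type: "Control.Type.FormCell.SimpleProperty" },
  { title: "length", value: "", type: "Control.Type.FormCell.SimpleProperty" },
];
const resultsObj = {
  formCell: [
    { title: "yearOfInstallation", value: "2001" },
    { title: "length", value: "10 meter" },
  ],
};
const filled = applyStructuredData(schema, resultsObj);
console.log(filled.map((c) => `${c.title}=${c.value}`).join(", "));
// prints "taskTitle=, yearOfInstallation=2001, length=10 meter"
```

Matching by title works only because both formCellSchema and structuredDataSchema tell the model that titles must match the schema exactly. Cells the speech did not mention keep their default values.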
