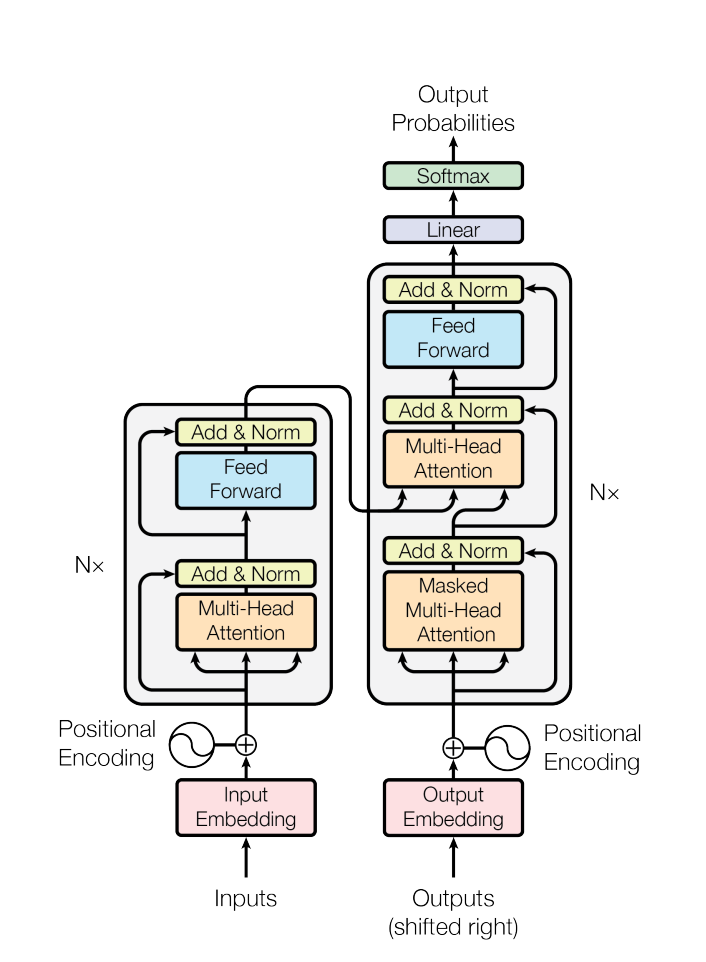

The self-attention mechanism serves as the cornerstone of every Large Language Model built on the transformer architecture. In…

#AI