
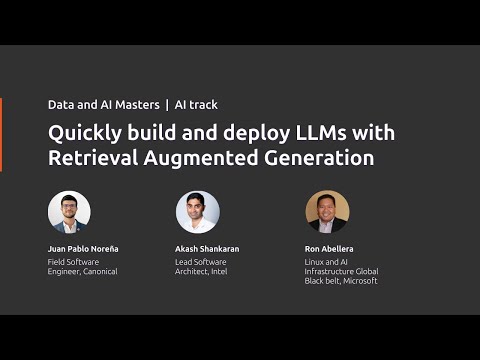

Retrieval-augmented generation (RAG) offers a new way to get more out of large language models (LLMs) by grounding their responses in retrieved data, making them more accurate, context-aware, and informative. Join Akash Shankaran (Intel), Ron Abellera (Microsoft), and Juan Pablo Norena (Canonical) for a tutorial on how RAG can enhance your LLMs.

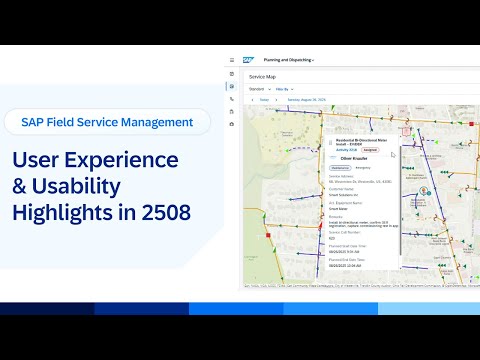

We will also explore how to optimise LLMs with RAG using Charmed OpenSearch, which can fill several roles in the pipeline: data ingestion, model ingestion, vector database, retrieval and ranking, and LLM connector. We will then walk through the architecture of our RAG deployment on Microsoft® Azure Cloud. In addition, the RAG pipeline's vector search uses Intel AVX® acceleration, which makes the RAG process faster and raises its throughput. Learn more at https://canonical.com/solutions/ai and https://canonical.com/data.
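To make the retrieval and ranking step more concrete, here is a minimal, self-contained sketch of the core RAG idea: rank stored passages by vector similarity to a query, then prepend the best matches to the prompt sent to an LLM. It is not the Charmed OpenSearch API or the presenters' implementation. The in-memory `DOCUMENTS` list, the three-dimensional toy embeddings, and the helper names `retrieve` and `build_prompt` are hypothetical stand-ins. A real deployment would use an embedding model and a vector index such as OpenSearch's k-NN search, and that vector search is the stage the post says is accelerated with Intel AVX®.

```python
import math

# Hypothetical in-memory "vector database": (passage, embedding) pairs.
# Real embeddings would come from an embedding model and live in a vector
# index (for example an OpenSearch k-NN index), not a Python list.
DOCUMENTS = [
    ("Charmed OpenSearch can act as a vector database.", [0.9, 0.1, 0.0]),
    ("Intel AVX accelerates vector operations.", [0.1, 0.9, 0.1]),
    ("Azure is Microsoft's cloud platform.", [0.0, 0.2, 0.9]),
]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query_embedding, k=2):
    """Retrieval and ranking: return the k passages most similar to the query."""
    ranked = sorted(
        DOCUMENTS,
        key=lambda doc: cosine_similarity(query_embedding, doc[1]),
        reverse=True,
    )
    return [passage for passage, _ in ranked[:k]]


def build_prompt(question, query_embedding, k=2):
    """Augmentation: put the retrieved passages in front of the user's question."""
    context = "\n".join(f"- {passage}" for passage in retrieve(query_embedding, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    # The query embedding is made up; it points mostly along the first axis,
    # so the OpenSearch passage ranks highest.
    print(build_prompt("Which component stores vectors?", [0.8, 0.2, 0.0], k=1))
```

The resulting prompt string is what would be passed to the LLM in the generation step. Brute-force scoring of every stored vector, as above, is the simplest correct approach. Dedicated vector databases exist because that scan becomes too slow as the collection grows.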
