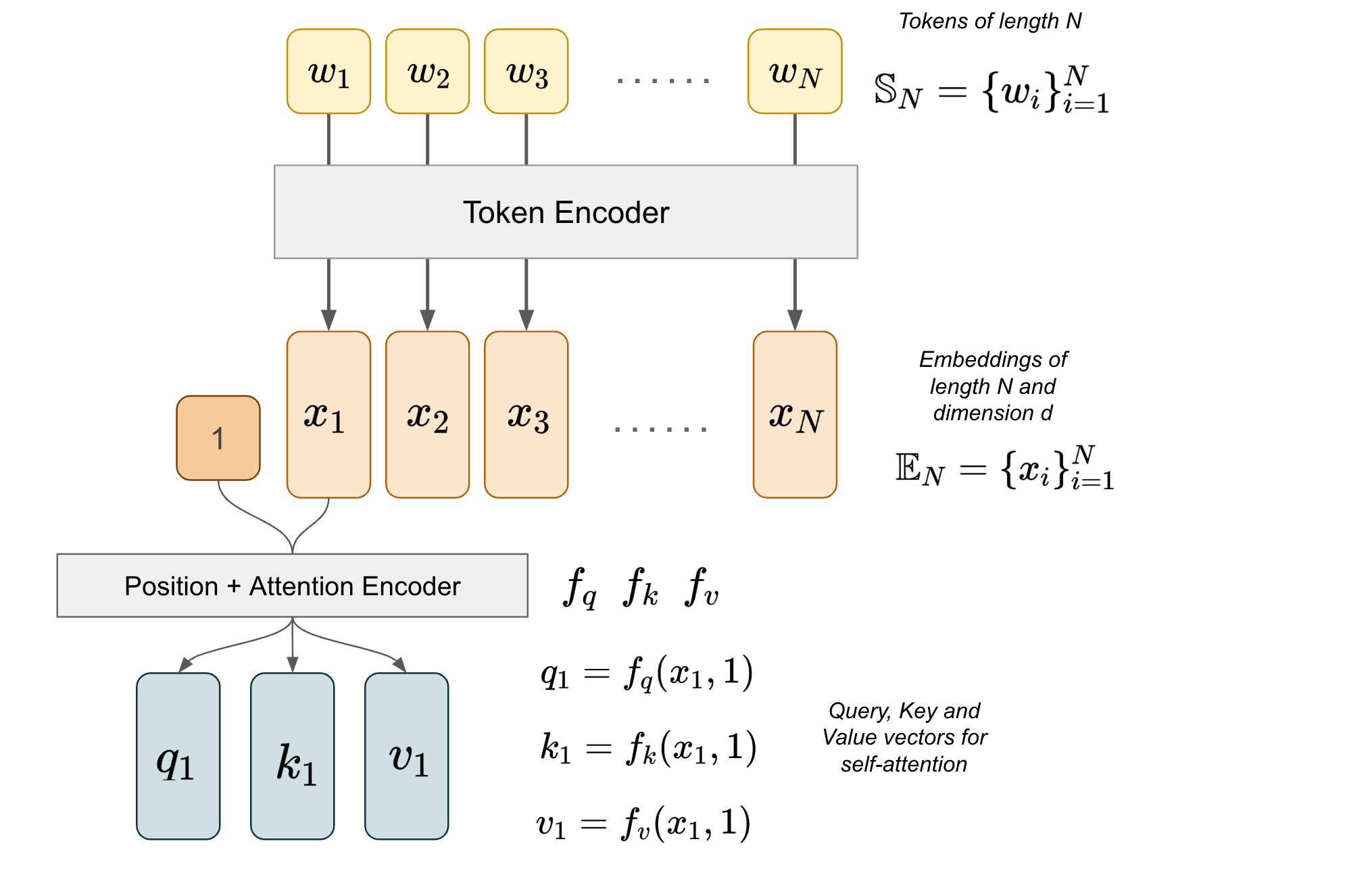

Attention-based models, such as large language models, apply attention layers throughout their building blocks. Since attention layers…

#AI