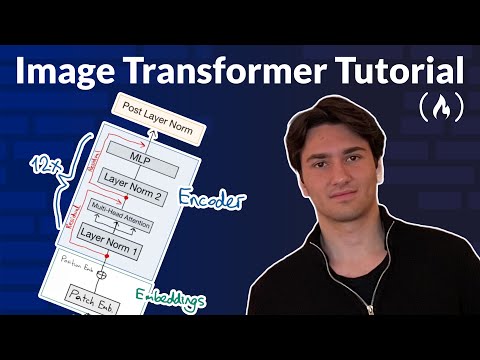

Vision Transformers (ViTs) are reshaping computer vision by applying self-attention to image processing. In this course, you will learn how to build a Vision Transformer from scratch. By the end, you'll have a deeper understanding of how AI models process visual data.

Course developed by @tungabayrak9765.

💻 Code: https://colab.research.google.com/drive/1Q6bfCG5UZ7ypBWft9auptcD4Pz5zQQQb?usp=sharing#scrollTo=1EaWO-aNOk3v

⭐️ Contents ⭐️

(0:00:00) Intro to Vision Transformer

(0:03:48) CLIP Model

(0:08:16) SigLIP vs CLIP

(0:12:09) Image Preprocessing

(0:15:32) Patch Embeddings

(0:20:48) Position Embeddings

(0:23:51) Embeddings Visualization

(0:26:11) Embeddings Implementation

(0:32:03) Multi-Head Attention

(0:46:19) MLP Layers

(0:49:18) Assembling the Full Vision Transformer

(0:59:36) Recap

❤️ Support for this channel comes from our friends at Scrimba – the coding platform that’s reinvented interactive learning: https://scrimba.com/freecodecamp

🎉 Thanks to our Champion and Sponsor supporters:

👾 Drake Milly

👾 Ulises Moralez

👾 Goddard Tan

👾 David MG

👾 Matthew Springman

👾 Claudio

👾 Oscar R.

👾 jedi-or-sith

👾 Nattira Maneerat

👾 Justin Hual

—

Learn to code for free and get a developer job: https://www.freecodecamp.org

Read hundreds of articles on programming: https://freecodecamp.org/news

#programming #freecodecamp #learn #learncode #learncoding