Post Content

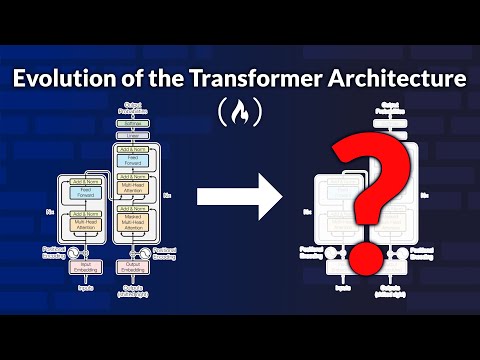

This course introduces the latest advancements that have enhanced the accuracy, efficiency, and scalability of Transformers. It is tailored for beginners and follows a step-by-step teaching approach.

In this course, you’ll explore:

– Various techniques for encoding positional information

– Different types of attention mechanisms

– Normalization methods and their optimal placement

– Commonly used activation functions

– And much more

You can find the slides, notebook, and scripts in this GitHub repository:

https://github.com/ImadSaddik/Train_Your_Language_Model_Course

Watch the previous course on LLMs mentioned in the introduction:

https://www.youtube.com/watch?v=9Ge0sMm65jo

To connect with Imad Saddik, check out his social accounts:

YouTube: @3CodeCampers

LinkedIn: /imadsaddik

Discord: imad_saddik

⭐️ Course Contents ⭐️

(0:00:00) Course Overview

(0:03:24) Introduction

(0:05:13) Positional Encoding

(1:02:23) Attention Mechanisms

(2:18:04) Small Refinements

(2:42:19) Putting Everything Together

(2:47:47) Conclusion

❤️ Support for this channel comes from our friends at Scrimba – the coding platform that’s reinvented interactive learning: https://scrimba.com/freecodecamp

🎉 Thanks to our Champion and Sponsor supporters:

👾 Drake Milly

👾 Ulises Moralez

👾 Goddard Tan

👾 David MG

👾 Matthew Springman

👾 Claudio

👾 Oscar R.

👾 jedi-or-sith

👾 Nattira Maneerat

👾 Justin Hual

—

Learn to code for free and get a developer job: https://www.freecodecamp.org

Read hundreds of articles on programming: https://freecodecamp.org/news Read More freeCodeCamp.org

#programming #freecodecamp #learn #learncode #learncoding

![Tips from a 20-year developer veteran turned consultancy founder – Tapas Adhikary [Podcast #206]](https://i4.ytimg.com/vi/GAZkxw6DJJE/hqdefault.jpg)