
Deciphering GPT-OSS Performance: Why Inference Setup Matters

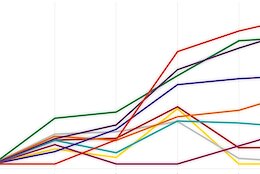

We explore how GPT-OSS performance varies across API providers and benchmarks, highlighting significant discrepancies in speed, cost, and measured intelligence.
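A rough way to see this variability yourself is to send the same prompt to several providers and compare the answers and latencies. The sketch below is a minimal illustration, not the method used in the video. It assumes requests are routed through OpenRouter's OpenAI-compatible chat completions endpoint and that the `provider` field with `order` and `allow_fallbacks` pins a request to one provider; check both against the current OpenRouter documentation. The `send` callable and the provider names are hypothetical placeholders that you would replace with a real HTTP call and real provider names.

```python
import time
from typing import Callable


def build_pinned_request(provider: str, prompt: str,
                         model: str = "openai/gpt-oss-120b") -> dict:
    """Build a chat request body pinned to a single provider.

    Assumption: the "provider" routing object follows OpenRouter's
    provider-routing options; verify the field names before relying on them.
    """
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0,  # reduce sampling noise between runs
        "provider": {"order": [provider], "allow_fallbacks": False},
    }


def time_call(send: Callable[[dict], str], body: dict) -> tuple[str, float]:
    """Send one request and return (answer, elapsed seconds)."""
    start = time.perf_counter()
    answer = send(body)
    return answer, time.perf_counter() - start


def compare_providers(providers: list[str], prompt: str,
                      send: Callable[[dict], str]) -> dict:
    """Run the same prompt against each provider and collect the results."""
    results = {}
    for name in providers:
        answer, seconds = time_call(send, build_pinned_request(name, prompt))
        results[name] = {"answer": answer, "seconds": seconds}
    return results
```

Here `send` is whatever function posts the body to the endpoint and returns the model's text; keeping it injectable means the comparison logic can be tried with a stub before spending any API credits.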

https://artificialanalysis.ai/models/gpt-oss-120b/providers#evaluations

https://x.com/ArtificialAnlys/status/1955102409044398415

https://x.com/DKundel/status/1955035810388119820

https://x.com/petergostev/status/1955764534490083813/photo/1

https://x.com/ClementDelangue/status/1953119901649891367

https://x.com/lmarena_ai/status/1955669431742587275

https://cookbook.openai.com/articles/openai-harmony

https://x.com/ggerganov/status/1953137378232615031

https://openrouter.ai/openai/gpt-oss-120b/providers

Website: https://engineerprompt.ai/

RAG Beyond Basics Course:

https://prompt-s-site.thinkific.com/courses/rag

Let’s Connect:

🦾 Discord: https://discord.com/invite/t4eYQRUcXB

☕ Buy me a Coffee: https://ko-fi.com/promptengineering

🔴 Patreon: https://www.patreon.com/PromptEngineering

💼 Consulting: https://calendly.com/engineerprompt/consulting-call

📧 Business Contact: engineerprompt@gmail.com

Become a Member: http://tinyurl.com/y5h28s6h

💻 Pre-configured localGPT VM: https://bit.ly/localGPT (use Code: PromptEngineering for 50% off).

Sign up for the localGPT newsletter:

https://tally.so/r/3y9bb0

00:00 Introduction to Inference Challenges

00:47 Benchmarking API Providers

03:40 Performance Variations Explained

06:57 Local Hosting Solutions

08:46 Conclusion and Final Thoughts

#AI #promptengineering