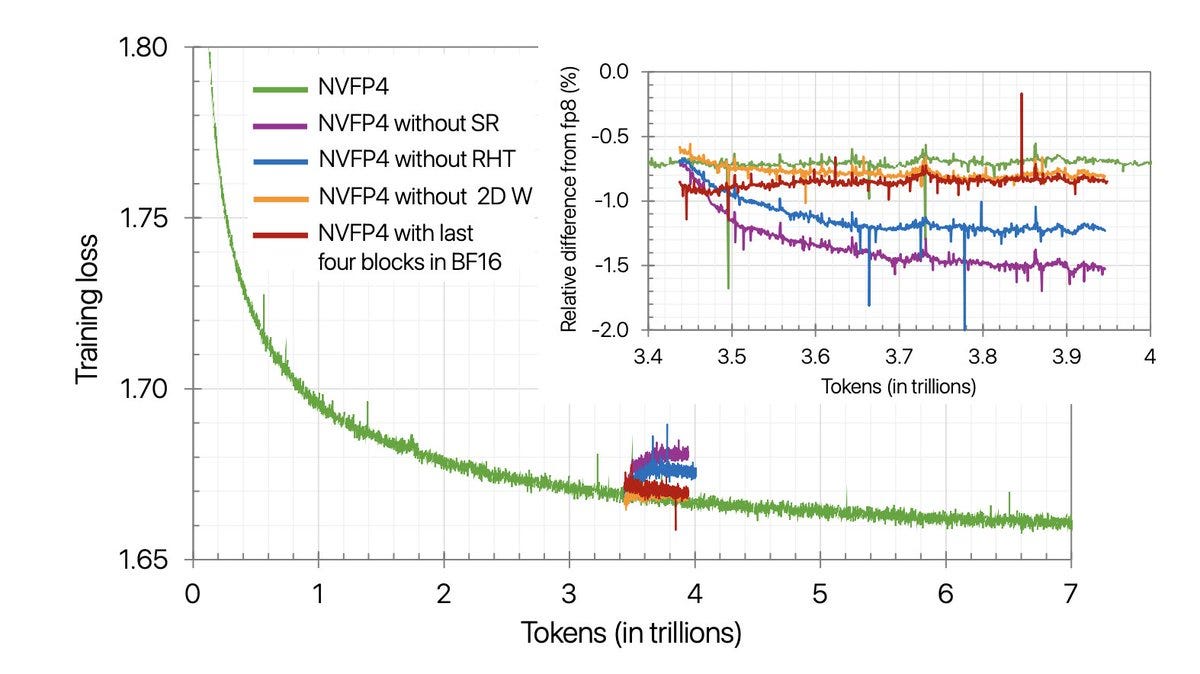

Okay, this one is wild. NVIDIA just trained a 12-billion-parameter language model on 10 trillion tokens — using only 4-bit precision. Yeah…

Continue reading on Coding Nexus on Medium.

#AI