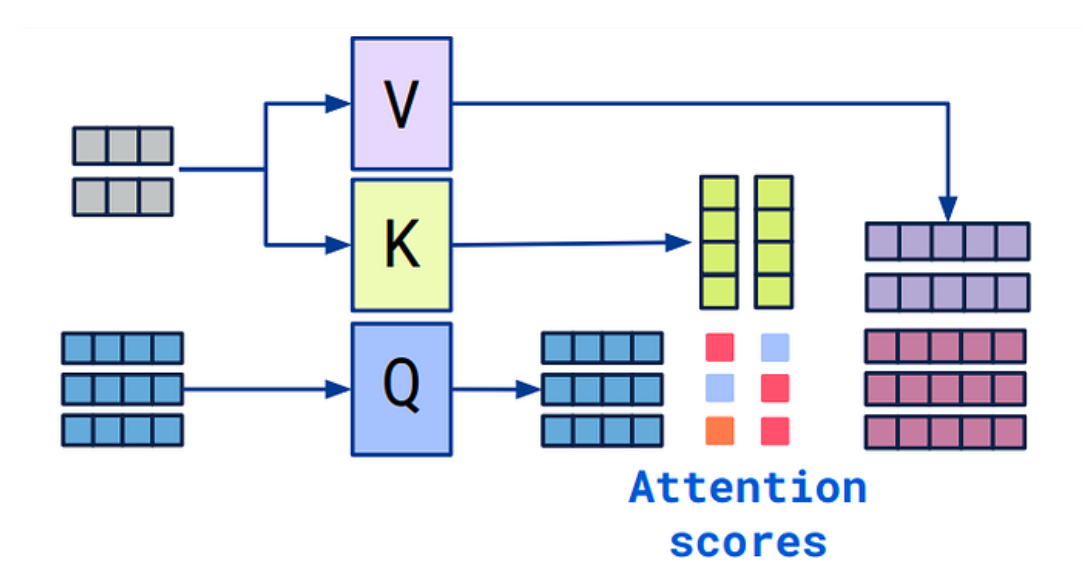

LLMs have become the base for AGI, as OpenAI says, so attention is everywhere: everything, all at once.

#AI
