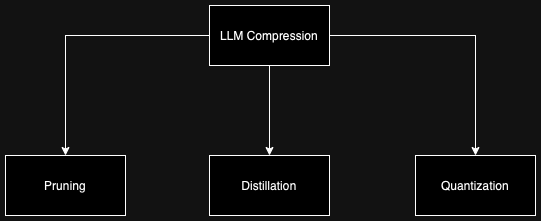

Efficient Deployment of Large Language Models through Quantization, Pruning, Distillation compression Techniques.

Efficient Deployment of Large Language Models through Quantization, Pruning, Distillation compression Techniques.Continue reading on Medium » Read More Llm on Medium

#AI

+ There are no comments

Add yours