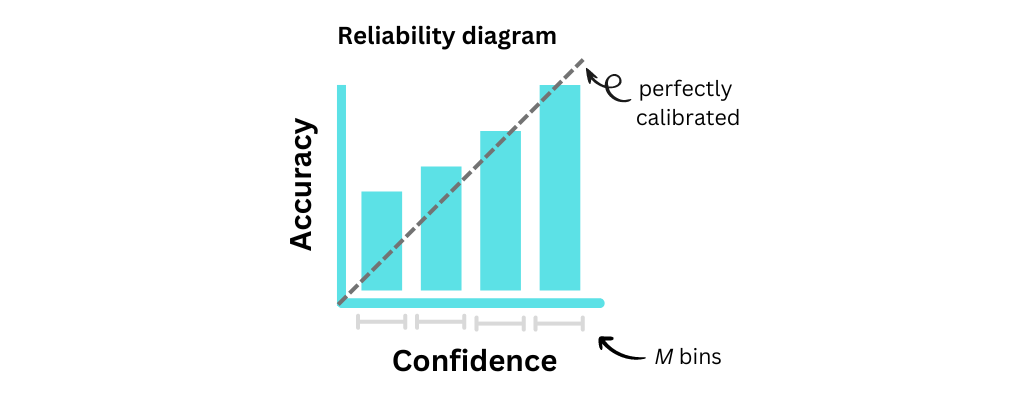

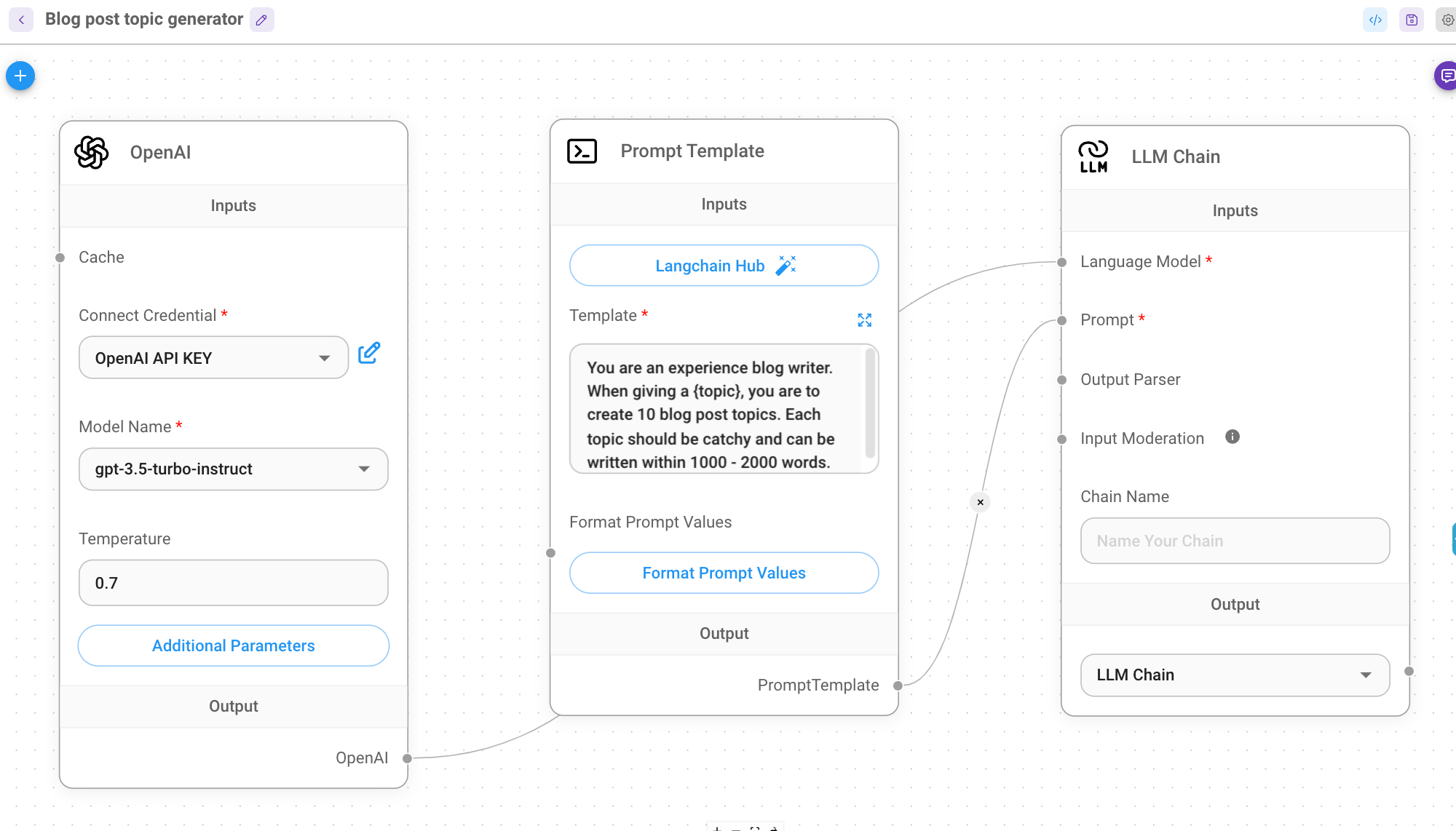

When you call OpenAI’s GPT models through the API, you can request `logprobs` (short for log-probabilities) for each token in the output. In…
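As a minimal sketch of what those values mean: a log-probability is the natural log of a token's probability, so `math.exp` recovers the probability, and summing the log-probabilities gives the log-probability of the whole output. The helper names and the sample numbers below are made up for illustration and are not real API output. With the official `openai` Python package, the per-token values are typically requested by passing `logprobs=True` to a chat completion call; that part is left as a comment so the snippet runs without an API key.

```python
import math

# Assumption: with the `openai` package, a call such as
#   client.chat.completions.create(model=..., messages=..., logprobs=True)
# exposes per-token entries under response.choices[0].logprobs.content,
# each with a `.token` and a `.logprob`. Here we use plain tuples instead.


def to_probabilities(token_logprobs):
    """Convert (token, logprob) pairs into (token, probability) pairs."""
    return [(token, math.exp(lp)) for token, lp in token_logprobs]


def sequence_logprob(token_logprobs):
    """Log-probability of the whole sequence: the sum of the token logprobs."""
    return sum(lp for _, lp in token_logprobs)


# Hypothetical values, for illustration only.
sample = [("Hello", -0.01), ("!", -0.69)]

for token, prob in to_probabilities(sample):
    print(f"{token!r}: {prob:.3f}")

print(f"sequence probability: {math.exp(sequence_logprob(sample)):.3f}")
```

A log-probability close to 0 means the model was nearly certain of that token; more negative values mean lower confidence.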


