Document Information Extraction – Premium

With the General Availability (GA) of Document Information Extraction, premium edition (DOX Premium), users now have the power to leverage Generative AI (GenAI) for extracting entities from structured documents. This cutting-edge capability allows for processing a wide variety of document types, in almost every language. However, as with most GenAI-driven applications, the success of the extraction process heavily depends on the quality of the prompts given to the model.

This blog post explores best practices for using GenAI in document extraction, focusing on optimizing performance through effective prompt engineering, refining field descriptions, and ensuring continuous improvement. By applying these insights, you can maximize the potential of GenAI-driven document processing in your organization.

The process of iterative prompt development

When working with large language models, it’s essential to understand that the first query is rarely perfect. For each field requiring extraction, we begin by writing a base prompt that captures essential information (such as position, format, or context) about the entity. Once we review the results, we often need to refine the prompt based on the initial output.

However, a word of caution: modifying the description of one field can impact the performance of other fields. Therefore, in DOX Premium, any adjustments to field descriptions should be followed by an analysis of all fields, not just those that were changed. This iterative process continues until we achieve satisfactory results.

Steps for Iterative Prompt Development:

1. Identify the field: Decide which field or specific extraction requires adjustment.
2. Write or refine the prompt: Create a clear, specific base description.
3. Review the results: Analyze the output for accuracy.
4. Perform error analysis: Identify mistakes and discrepancies.
5. Repeat: Iterate until the desired result is achieved.
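The "analyze all fields, not just the changed ones" rule can be turned into a small regression check. The following is a minimal sketch, not part of DOX Premium: it assumes you have exported the extracted values and a manually verified ground truth as plain dictionaries. The function names, the field names, and the sample values are all invented for illustration.

```python
from typing import Dict, List

# Assumed data shape: one dict per document, mapping field name -> extracted value.
Extraction = Dict[str, str]


def field_accuracy(predictions: List[Extraction], truth: List[Extraction]) -> Dict[str, float]:
    """Share of documents in which each field matches the ground truth."""
    fields = sorted({name for doc in truth for name in doc})
    scores = {}
    for name in fields:
        # Only score documents whose ground truth contains this field.
        relevant = [(p, t) for p, t in zip(predictions, truth) if name in t]
        hits = sum(1 for p, t in relevant if p.get(name, "").strip() == t[name].strip())
        scores[name] = hits / len(relevant)
    return scores


def regressions(before: Dict[str, float], after: Dict[str, float]) -> List[str]:
    """Fields whose accuracy dropped after a description change."""
    return [name for name in before if after.get(name, 0.0) < before[name]]


# Invented sample data: two documents, two fields.
truth = [{"country": "Germany", "incoterm": "FOB"}, {"country": "France", "incoterm": "CIF"}]
run_1 = [{"country": "Deutschland", "incoterm": "FOB"}, {"country": "France", "incoterm": "CIF"}]
# Second run, after only the "country" description was refined.
run_2 = [{"country": "Germany", "incoterm": "Free on Board"}, {"country": "France", "incoterm": "CIF"}]

before = field_accuracy(run_1, truth)
after = field_accuracy(run_2, truth)
print(regressions(before, after))  # ['incoterm']
```

In this made-up run, the refined country description fixes the country field but the incoterm field gets worse, which is exactly the kind of side effect that only shows up when every field is re-checked after each iteration.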

Best Practices

Leverage Default Extractors

Before diving into custom prompt development, take advantage of the default extractors provided by DOX Premium. These extractors are designed to select the best possible model for any given scenario—whether it’s one of our own pre-trained models or a GenAI model. Trained on hundreds of thousands of business documents, they are highly accurate for common fields and document types, making them a valuable resource for initial extraction efforts. It’s beneficial to check the performance of these pre-trained models, especially when the language is supported by them.

Activating these default extractors before relying on generative AI can help you assess whether further customization is needed. In many cases, combining pre-trained models with generative AI yields optimal results for specific use cases. Here’s a quick guide on how to activate the default extractors:

Clear and concise field descriptions

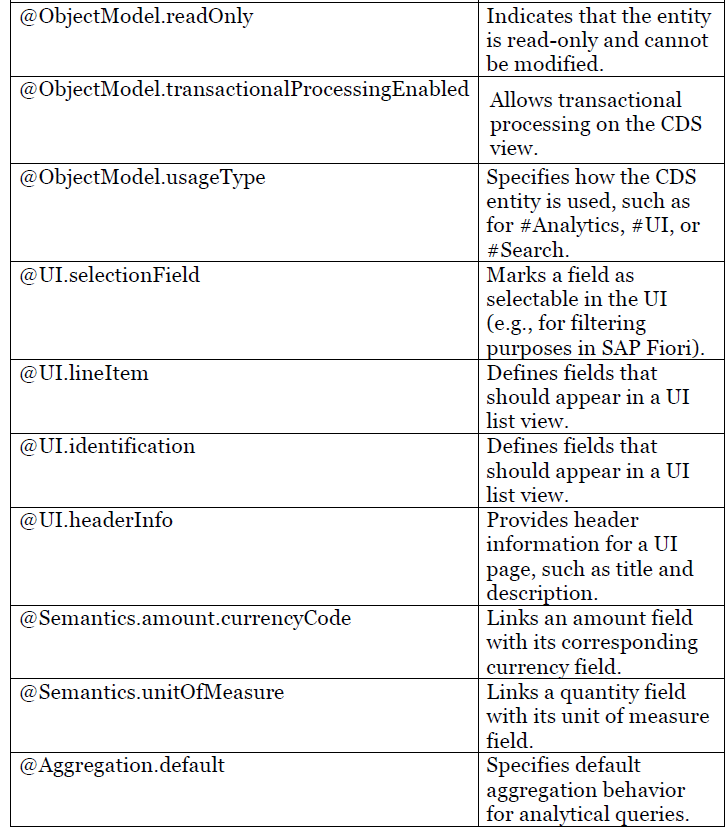

Using clear and concise language to describe fields as part of a prompt significantly improves extraction accuracy in DOX Premium. Effective field descriptions help the model understand the specific data to extract, reducing ambiguity and errors. This is particularly important when similar fields exist, and you want to guide the model in a specific direction.

Field descriptions can also be tailored to manipulate the output format or language. In the following example from a delivery note, we compare a basic prompt with a more guided approach that provides clearer instructions.

| Don’t | Do |
| --- | --- |
| Extract the country | Extract the country from the address. Please translate the country into English. |
| Extract the delivery conditions | Extract the delivery conditions. It is sufficient to extract only the abbreviation of the delivery condition mentioned on the document. |
| Material reference | Material Description |

Screenshots: extraction before and extraction after

In the example, we clarified the country extraction and achieved the desired outcome of translating the country name into English. For delivery conditions, specifying that only the abbreviation should be extracted helped produce a more accurate result. The last example highlights that even semantically close descriptions can generate different outputs.

Not all business documents follow such a clear, labeled structure. For instance, many purchase orders include material numbers or codes that may not have explicit labels or indicators on the document. In these cases, understanding the entities—such as recognizing a specific format or consistent positioning on the document—becomes essential. Once this knowledge is gathered, you can improve extraction by incorporating these specifics into the field descriptions.
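One way to keep this knowledge organized is to collect it per field and generate the description text from it. The snippet below is only a sketch of that idea. `FieldHint` and its attributes are hypothetical names, not a DOX Premium API, and the resulting text would still be pasted into the field description in the schema by hand.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class FieldHint:
    """Hypothetical helper that collects what is known about one entity."""
    what: str                      # the entity to extract
    position: str = ""             # where it usually appears on the document
    fmt: str = ""                  # the format it follows
    extras: List[str] = field(default_factory=list)  # any further instructions

    def description(self) -> str:
        """Build one field description from the collected hints."""
        parts = [f"Extract {self.what}."]
        if self.position:
            parts.append(f"It usually appears {self.position}.")
        if self.fmt:
            parts.append(f"It has the format {self.fmt}.")
        parts.extend(self.extras)
        return " ".join(parts)


hint = FieldHint(
    what="the material number",
    position="in the first column of the line item table",
    fmt="three digits, a dot, two digits, a dot, two digits",
    extras=["It often has no label on the document."],
)
print(hint.description())
```

Writing the hints down in a structured way also makes it easier to see which piece of information was added in which iteration.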

Schema description

DOX Premium now allows you to define schema descriptions, which serve as general instructions to the large language model (LLM) before the schema and field-specific descriptions are applied. These schema descriptions can enhance the extraction process at the document level without focusing on individual fields.

One effective approach is to role-play with the LLM by assigning it a persona. For example:

“You are an expert in extracting purchase orders from company X, which are related to products Y.”

This instruction can be followed by more general directives, such as specifying language preferences or emphasizing certain priorities. Schema descriptions can also help clarify correlations between fields. For instance, you can instruct the LLM to differentiate between the sending and receiving entities:

“Company X is always the receiver/supplier of the purchase order.”

In the screenshot example, we demonstrate a schema description being used to guide the model’s understanding of a nested structure within a delivery note. In this case, the line item table contains multiple material codes that may be linked to each other. The schema description informs the LLM to treat all nested material codes as part of a single line item:

“The material codes in the line item table can have a nested structure, meaning that subsequent codes can belong to each other. Please extract all the nested material codes as one line item.”

Extraction of nested structure with schema description

This approach helps the model accurately interpret and extract data even from complex or hierarchical document structures.
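The pieces discussed in this section (a persona first, then general directives) can be assembled into one schema description text. The sketch below simply joins the example sentences from this post. `build_schema_description` is a hypothetical helper, and how DOX Premium combines the schema description with the field descriptions internally is not shown here.

```python
from typing import List


def build_schema_description(persona: str, directives: List[str]) -> str:
    """Join a persona sentence and general directives into one text block."""
    sentences = [persona.strip()] + [d.strip() for d in directives if d.strip()]
    return " ".join(sentences)


schema_description = build_schema_description(
    persona="You are an expert in extracting purchase orders from company X, "
            "which are related to products Y.",
    directives=[
        "Company X is always the receiver/supplier of the purchase order.",
        "The material codes in the line item table can have a nested structure, "
        "meaning that subsequent codes can belong to each other. "
        "Please extract all the nested material codes as one line item.",
    ],
)
print(schema_description)
```

Keeping the directives in a list makes it straightforward to add or remove a single instruction between iterations and compare the results.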

Regular Expressions

If you want to extract numbers that have a standardised format, it can help to include a regular expression in the field description. Regular expressions are like a smart search tool that helps you find specific patterns or sequences of text in a large amount of data. For example, if you are pretty sure that the article number on purchase orders has a structure of ‘xxx.xx.xx’, where x is a digit between 0 and 9, you can use the following regular expression: \d{3}\.\d{2}\.\d{2}. Here, \d matches a single digit and \. matches a literal dot. As most DOX users (like myself) are not experts in this language, you can just use ChatGPT or other related tools to generate these expressions.
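Before putting a generated expression into a field description, it is worth checking it locally against a few known values. The following sketch uses Python's standard `re` module. The sample text is invented, and the word boundaries (`\b`) are an addition for this local test so that longer numbers are not matched in part.

```python
import re

# Pattern for the 'xxx.xx.xx' article number structure.
# \d matches one digit, \. matches a literal dot.
ARTICLE_NUMBER = re.compile(r"\b\d{3}\.\d{2}\.\d{2}\b")


def find_article_numbers(text: str) -> list:
    """Return every substring that matches the article number structure."""
    return ARTICLE_NUMBER.findall(text)


# Invented sample line from a purchase order.
sample = "Pos 10  Art. 123.45.67  Qty 5  Ref 1234.56.78"
print(find_article_numbers(sample))  # ['123.45.67']

# Why the dots need escaping: an unescaped '.' matches any character.
loose = re.fullmatch(r"\d{3}.\d{2}.\d{2}", "123x45y67")
strict = re.fullmatch(r"\d{3}\.\d{2}\.\d{2}", "123x45y67")
print(loose is not None, strict is not None)  # True False
```

The second check shows the most common mistake with such patterns: without the backslash, the dot accepts any separator, so the expression is less specific than intended.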

Field Names

It’s important to emphasize that field names are also part of the LLM’s input, which means they need to be meaningful and clear. As demonstrated in the schema description example, the wording of field names should align with the naming conventions used in the documents being processed. If users need to utilize different field identifiers for further processing, they can do so by using the ‘Label’ cell in the schema.

For more practical examples of field names and other related topics, you can check out the DOX help page: https://help.sap.com/docs/document-information-extraction/document-information-extraction/extraction-using-generative-ai?locale=en-US

Summary

With DOX Premium, a prompt engineer has the flexibility to get creative and guide the model in a desired direction. Throughout the process of integrating this solution, it is crucial to align the model’s behavior with the specific needs of the business. Since extracted data is often processed further in the workflow, ensuring its accuracy is essential.

Regular feedback loops between business and IT teams are invaluable for improving the service’s usability and overall value. These ongoing interactions help to continuously refine prompts and schema descriptions, ensuring the solution delivers consistent and reliable results.

New Features

Instant Learning

The instant learning feature leverages a few-shot prompting approach to significantly enhance the AI model’s understanding through real-time user feedback. In a few-shot prompting approach, the AI model is provided with several examples to help it better understand and execute a particular task. This method proves particularly effective for improving accuracy in complex tasks. For example, in sentiment classification, the model’s output can be refined by supplying examples of the desired result:

This is awesome! // Positive

This is bad! // Negative

Wow that movie was rad! // Positive

What a horrible show! //
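To make the mechanics concrete, here is a minimal sketch of how such a few-shot prompt can be assembled from labeled examples. It illustrates the general technique only. `few_shot_prompt` is a hypothetical helper and does not show how DOX Premium builds its prompts internally.

```python
from typing import List, Tuple


def few_shot_prompt(examples: List[Tuple[str, str]], query: str) -> str:
    """Format labeled examples, then the open query for the model to complete."""
    lines = [f"{text} // {label}" for text, label in examples]
    lines.append(f"{query} //")  # label left empty on purpose
    return "\n".join(lines)


examples = [
    ("This is awesome!", "Positive"),
    ("This is bad!", "Negative"),
    ("Wow that movie was rad!", "Positive"),
]
prompt = few_shot_prompt(examples, "What a horrible show!")
print(prompt)
```

The model sees the pattern "text // label" three times and is expected to continue the last line with a label in the same style.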

For now, users need to select the Setup Type ‘auto’ and exclude the default extractors to activate the feedback mechanism. Once it is enabled, they can review and correct the extracted values. This feedback, which functions as a few-shot prompt, helps the system learn about the document layout and the corrections. The knowledge gained is then used to improve extraction accuracy for future documents with similar layouts.

In the screenshots below, you can see the process in the DOX UI, where I selected a simple example of a naive address definition and made adjustments to refine the extraction for the address field:

Edit the document

Apply and confirm the corrections

Next incoming (similar) document

The last screenshot shows the updated extraction when uploading the document again with the same schema. The model now extracts the correct address from the top of the document rather than the shipping address. This change persists as long as the confirmed document remains stored in the backend, ensuring consistent extractions for similar future documents.

Data Type: List of Values

The new “List of Values” data type enables classification scenarios within DOX Premium and can significantly improve extraction performance for specific fields. This feature allows users to define the expected output values for a field, which helps guide the large language model (LLM) in its predictions.

For instance, if a user needs to classify documents as either invoices or debit notes, they can define those values in the list. The LLM will then analyze the entire document to make an informed classification. Below is an example of how this field is set up in the DOX UI:

In this case, you can also leverage the field description and the description of the list values to provide additional instructions or hints to the LLM. Another possible application of this feature is field-level classification, such as identifying the unit of measure. Here, users can define a list of possible outputs, like Kilograms or Tons, which the model will reference during extraction.
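The effect of a closed list can be pictured as mapping whatever appears on the document onto one of the allowed values. The sketch below shows that idea as a downstream check in plain Python. It is not how DOX Premium implements the data type, and the synonym lists are invented for the unit-of-measure example.

```python
from typing import Dict, List, Optional

# Hypothetical list of values with a few spellings that may appear on documents.
UNIT_VALUES: Dict[str, List[str]] = {
    "Kilograms": ["kg", "kilogram", "kilograms"],
    "Tons": ["t", "ton", "tons", "tonne", "tonnes"],
}


def to_list_value(raw: str, values: Dict[str, List[str]]) -> Optional[str]:
    """Map a raw string onto one of the allowed values, or None if nothing fits."""
    key = raw.strip().lower().rstrip(".")
    for value, synonyms in values.items():
        if key == value.lower() or key in synonyms:
            return value
    return None


print(to_list_value("KG", UNIT_VALUES))      # Kilograms
print(to_list_value(" tons ", UNIT_VALUES))  # Tons
print(to_list_value("litres", UNIT_VALUES))  # None
```

A check like this can also be used after extraction to flag documents where the returned value falls outside the defined list.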

Conclusion & Next Steps

We hope this blog has demonstrated some useful hacks that you can apply to your next DOX project or use case. We would love to hear your feedback on how these techniques are working with your specific documents, or if you have additional ideas on how to improve the extraction process.

If you’re still unsure whether DOX Premium is the right solution for your document needs, please don’t hesitate to reach out to me or my colleagues on the AI RIG Team. We offer dedicated support to help activate the service and enable end users to successfully implement it. Additionally, we can review your documents and conduct a small Proof of Concept (PoC) using 10-20 documents, along with a live demo of the results.

christoph.batke@sap.com
sap_ai_rig@sap.com