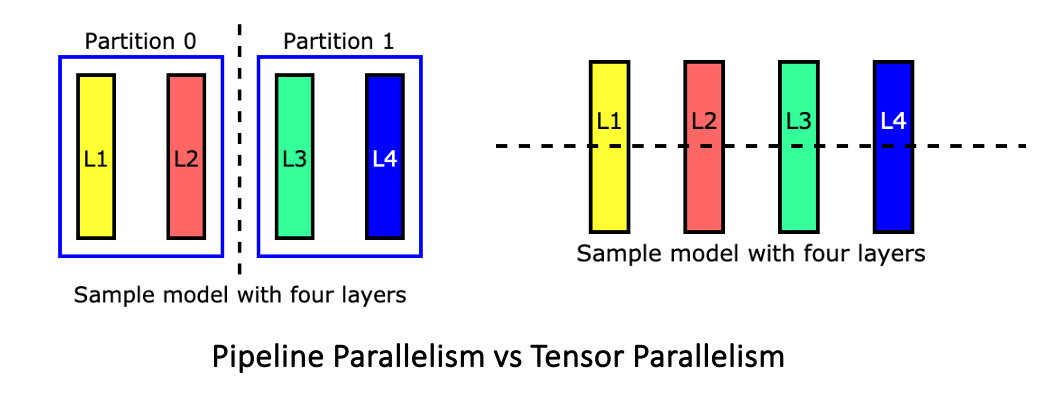

As models grow in size, it becomes impossible to fit them in the memory of a single GPU for inference.
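A rough weight-only memory estimate shows why. This is a minimal sketch under stated assumptions: it counts only the bytes needed to store the parameters (activations, KV cache and framework overhead are ignored, so real requirements are higher), and the 7B/70B model sizes and the 80 GB per-GPU figure are illustrative choices, not numbers from the article. The helper names `weight_memory_gb` and `min_gpus_for_weights` are hypothetical.

```python
import math

# Bytes needed to store one parameter in common numeric formats.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Memory (GB, 1 GB = 1e9 bytes) needed just to hold the weights.

    Assumption: weights only; activations, KV cache and runtime
    overhead are not included, so this is a lower bound.
    """
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def min_gpus_for_weights(num_params: float, gpu_memory_gb: float,
                         dtype: str = "fp16") -> int:
    """Smallest number of GPUs whose combined memory can hold the weights."""
    return math.ceil(weight_memory_gb(num_params, dtype) / gpu_memory_gb)


if __name__ == "__main__":
    GPU_MEMORY_GB = 80  # illustrative per-GPU memory, an assumption
    for name, params in [("7B", 7e9), ("70B", 70e9)]:
        gb = weight_memory_gb(params)
        gpus = min_gpus_for_weights(params, GPU_MEMORY_GB)
        print(f"{name}: {gb:.0f} GB of fp16 weights -> at least {gpus} x {GPU_MEMORY_GB} GB GPU(s)")
```

Under these assumptions a 70B-parameter model already needs about 140 GB for its fp16 weights alone, more than one 80 GB device can hold, so the model has to be split across at least two GPUs before inference can even start.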

Continue reading on Thomson Reuters Labs (Medium) »
