Introduction

The last few years have seen a surge in worldwide interest in Artificial Intelligence (AI). These days, it may feel like no matter where you look you can’t avoid hearing about AI’s latest innovations (Bain & Company), billion-dollar investments (SAP Investment) or how it’s going to transform our way of life (MIT).

The surge in AI’s popularity can be partly attributed to recent breakthroughs in Large Language Models and Generative AI, which have lowered the barrier to entry and enabled non-technical users to engage with and benefit from the technology like never before. However, with this popularity, it can be easy to get confused by the many terms and concepts that often seem interchangeable from an outsider’s perspective.

To help clear up the confusion, this blog aims to provide a concise definition of AI and highlight key innovations that have emerged over the years. While AI is a vast field with each research area deserving its own detailed discussion, we’ll keep things brief by focusing on a high-level overview of each area and the context surrounding them. By the end of this blog, you’ll have the historical and contextual foundation needed to dive deeper into specific areas of AI.

Key Areas at a glance

This blog will explore these areas in more detail, but to start, it’s helpful to think of them as parent and child topics. While this isn’t always perfectly accurate (for example, not all Generative AI comes from deep learning), it’s a good way to see how they relate.

(Reference: https://www.ibm.com/topics/artificial-intelligence, https://cloud.google.com/learn/what-is-artificial-intelligence)

What is AI?

Definition:

Artificial Intelligence isn’t a single technology or piece of software. Rather, it’s the term used to describe the broad set of technologies that enable computers to perform tasks that require human-like intellectual abilities. The exact nature of “human-like intellectual abilities” is still up for debate; however, most experts would agree it involves the ability to learn, reason, solve problems and act.

Context:

While AI is often linked with modern technology, the concept has existed in human culture for centuries. An early example is Talos from Greek mythology—a giant bronze automaton created around 300 BCE to protect Crete from pirates (link https://en.wikipedia.org/wiki/Talos).

Here is an image of Talos generated using one of the many online AI tools available. Several tools are available without a paywall, and you can try them out yourself by searching for “AI Picture Generator”. These sites are not affiliated with SAP.

However, the field of AI as a formal area of study is widely recognized to have originated in the 1950s and 1960s, with two significant milestones. The first was Alan Turing’s 1950 research article “Computing Machinery and Intelligence,” which posed the question “Can machines think?” and introduced “the imitation game” (later called the “Turing Test”) to evaluate a machine’s ability to exhibit human-like intelligence. The second was the 1956 Dartmouth Summer Research Project, organized by John McCarthy, which served as a pivotal six-week brainstorming session on AI. Together, these events are credited with founding AI as a research discipline, and Turing and McCarthy are often considered the fathers of AI.

(Reference: https://en.wikipedia.org/wiki/History_of_artificial_intelligence, https://www.britannica.com/science/history-of-artificial-intelligence, https://qbi.uq.edu.au/brain/intelligent-machines/history-artificial-intelligence)

What are Symbolic and Non-Symbolic AI?

Definition:

Serving as two sub-categories of the broader research area of AI, symbolic AI and non-symbolic AI (also known as sub-symbolic AI) represent different approaches to artificial intelligence. Symbolic AI involves the use of high-level, human-readable symbols and rules to represent knowledge and solve problems. It relies on explicit representations of the world and the use of logical reasoning processes to manipulate these representations.

Non-symbolic AI, in contrast, focuses on processing data and learning patterns without relying on explicit, human-readable symbols or rules. Instead of using predefined logic and symbolic representations, non-symbolic AI employs methods that learn from data through statistical, probabilistic, and computational models.

Context:

After AI was founded as a research area, many different approaches were attempted (including several early examples of machine learning). Over time, these approaches generally fell into two camps: symbolic and non-symbolic AI. Initial successes in the AI space were mostly driven by symbolic AI, because it used approaches such as rule-based systems, which were easily understood by their human creators and were more feasible given the computing limitations of the time. A prominent example of a symbolic AI application is MYCIN, an early expert system developed in the 1970s at Stanford University. MYCIN was designed to assist medical professionals in diagnosing bacterial infections and recommending appropriate antibiotic treatments. It is one of the most cited and significant examples of symbolic AI in action. Here’s a closer look at how MYCIN worked:

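MYCIN’s knowledge base consisted of around 600 rules that encoded expert knowledge about bacterial infections and corresponding antibiotic treatments. These rules were typically of the if-then variety: if a set of conditions about the patient and the organism held, then the system drew a conclusion, with a stated degree of certainty.

To gather the facts its rules needed, it would query the physician running the program via a long series of simple yes/no or textual questions.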
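To make the idea of if-then rules more concrete, here is a minimal sketch of a rule-based system in Python. The rules and fact names are invented purely for illustration and are not taken from MYCIN, and the sketch uses a simple "apply rules until nothing changes" loop (forward chaining) rather than MYCIN's actual reasoning strategy. The physician's yes/no answers are represented by a plain dictionary.

```python
# Hypothetical if-then rules, invented for illustration (not MYCIN's real rules).
# Each rule is: (facts that must all be known, conclusion to add).
RULES = [
    ({"gram_negative", "rod_shaped"}, "possible_organism_x"),
    ({"possible_organism_x", "no_known_allergy"}, "consider_treatment_y"),
]


def infer(facts, rules=RULES):
    """Apply the rules repeatedly until no rule adds a new fact."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# Stand-in for the physician's yes/no answers to the system's questions.
answers = {"gram_negative": True, "rod_shaped": True, "no_known_allergy": False}
facts = {name for name, is_yes in answers.items() if is_yes}

conclusions = infer(facts) - facts
print(sorted(conclusions))
```

Because every conclusion can be traced back to the rule that produced it, systems like this are easy for people to inspect, which is part of why the symbolic approach was attractive early on.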

Symbolic systems such as these are still prevalent today. However, because these programs are rule-based, they struggle with computational cost, with applying rules in domains they were not defined for, and with handling ambiguous information. These limitations soon caused research to hit several dead ends, leading to periods known as “AI Winters,” where interest (and funding) in AI dwindled significantly.

Researchers soon started to focus on other approaches to AI, such as “connectionism”, “soft computing”, and “reinforcement learning”. These approaches did not follow the same logical processes and thus were called “non-symbolic.” Most of the AI innovations we see today, such as machine learning, deep learning, and generative AI, are the result of the success of these non-symbolic approaches.

(Reference: https://en.wikipedia.org/wiki/Symbolic_artificial_intelligence, https://www.datacamp.com/blog/what-is-symbolic-ai, https://wou.edu/las/cs/csclasses/cs160/VTCS0/AI/Lessons/ExpertSystems/Lesson.html)

What is Machine Learning?

Definition:

Machine Learning (ML) is a subfield of non-symbolic AI, which itself is a subfield of artificial intelligence (AI). The term describes a range of approaches and techniques that enable computers to learn from data and make decisions or predictions based on it. This means that “Machine Learning” isn’t a technology in and of itself but a category of technologies.

Context:

Machine learning comes in several forms including supervised learning, unsupervised learning, and reinforcement learning (there are more but these are the main ones). These forms describe the process the machine takes to learn from the data inputs. Within each form of Machine Learning there are several algorithms available to achieve the learning style. For supervised learning, for example, decision tree and linear regression algorithms are commonly used (deep dives into these algorithms are not covered here). It’s important to note that before data is fed into the Machine Learning model it needs to be cleaned and have the relevant data features identified (data features are the measures you want the model to act upon). This process of selecting features requires direct involvement from a Machine Learning engineer with significant domain expertise.
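As a rough illustration of supervised learning, the sketch below fits a linear regression with a single feature using the standard least-squares formulas, written in plain Python so it runs without extra libraries. The data is made up for the example: the feature (floor area) has been chosen by hand, which mirrors the feature selection step described above, and the labels (prices) are what the model learns to predict.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept for a single feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Use the learned slope and intercept to predict a label for a new input."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical labelled training data (invented for this example):
# feature = floor area in square metres, label = price in thousands.
areas = [50, 60, 80, 100]
prices = [150, 180, 240, 300]

model = fit_line(areas, prices)
print(predict(model, 70))
```

The "learning" here is simply finding the slope and intercept that best fit the labelled examples; real projects would typically use an established library and many more features.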

Machine Learning techniques can be traced back as far as the 1960s; however, it wasn’t until the 1980s that major strides were made. This was because Machine Learning required more computing power and data than what was readily available at the time. As technology advanced, however, developments like the internet and Moore’s Law eased these constraints, allowing more innovations to come from Machine Learning.

Informally, machine learning algorithms can be split into two key categories: traditional machine learning and deep learning. Traditional machine learning algorithms often rely on explicit programming and feature engineering by human experts. More recently, innovations in Deep Learning have reduced the reliance on this manual pre-processing.

(Reference: https://mitsloan.mit.edu/ideas-made-to-matter/machine-learning-explained, https://www.ibm.com/topics/machine-learning, https://en.wikipedia.org/wiki/Machine_learning)

What is Deep Learning?

Definition:

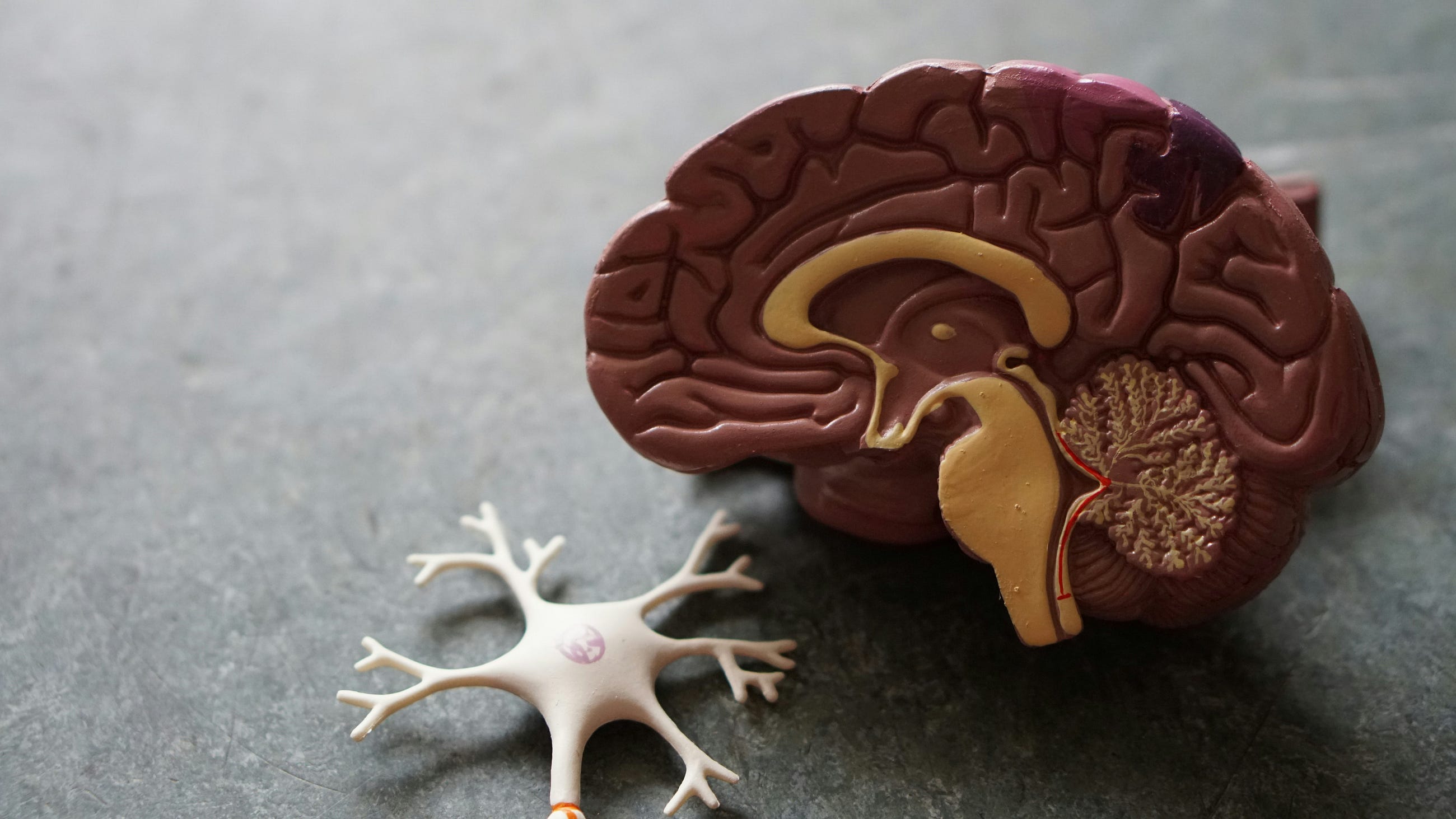

Deep learning is an evolution of machine learning that uses artificial neural networks (ANNs) to function.

An artificial neural network (ANN) is a model inspired by the brain’s structure. It has nodes (similar to neurons) connected by edges (similar to synapses). When data is input into the network, it passes from one layer of nodes to the next. Each node processes the data a bit more before sending it on. This layered processing helps extract progressively more useful information from the data at each step, which leads to better results. The “Deep” in deep learning refers to the number of layers used in the ANN.

For example, in an image recognition model, the raw input may be an image. The first representational layer may attempt to identify basic shapes such as lines and circles, the second layer may detect arrangements of edges, the third layer may detect a nose and eyes, and the fourth layer may recognize that the image contains a face.

This is, of course, a very simplified example. Performing a similar task in practice requires a much more complex system, and the steps involved are rarely this clear-cut, because it can be hard to fully understand what each layer is doing. For this reason, the layers between the input and the output are often described as “hidden layers”.
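The layer-by-layer processing described above can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the weights and biases are hand-picked numbers (a real network learns them during training), the input is two made-up values rather than an image, and the sigmoid activation is just one common choice.

```python
import math


def sigmoid(value):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-value))


def layer_forward(inputs, weights, biases):
    """One layer: every node takes a weighted sum of all inputs, adds its bias,
    and passes the result through the activation function."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]


def network_forward(inputs, layers):
    """Feed the data through each layer in turn; each layer's output
    becomes the next layer's input."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical, hand-picked parameters (a trained network would learn these).
layers = [
    ([[0.5, -0.5], [1.0, 1.0], [-1.0, 0.5]], [0.0, -0.5, 0.1]),  # hidden layer 1: 2 inputs -> 3 nodes
    ([[1.0, -1.0, 0.5], [0.2, 0.4, -0.6]], [0.0, 0.1]),          # hidden layer 2: 3 inputs -> 2 nodes
    ([[1.5, -1.5]], [0.0]),                                      # output layer: 2 inputs -> 1 node
]

output = network_forward([0.8, 0.2], layers)
print(output)
```

Adding more entries to `layers` makes the network "deeper". The intermediate values produced by the first two layers are never shown to the user, which is one way to picture why those layers are called hidden.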

Context:

Much like Machine Learning, Deep Learning was also propelled forward by Moore’s Law. The architecture for deep learning has been known for some time, with examples dating back to the 1960s. However, these models required powerful hardware and massive amounts of data that weren’t available until recently.

Unlike most traditional machine learning techniques, deep learning has the ability to automatically learn relevant features directly from the raw data. This reduces the need for manual feature engineering, where humans have to define and transform the data features into a format suitable for the algorithm. The below diagram attempts to show this.

Now that deep learning has become commonplace, is traditional machine learning obsolete?

No. It depends heavily on the task, the timing and the resources available.

In general, deep learning has the following benefits:

- Reduced Need for Feature Engineering: Deep learning models automatically learn features from raw data, saving time and reducing the need for manual intervention.
- Performance on Complex Data: Excels with complex, high-dimensional data such as images, audio, and video.
- Effectiveness with Large Datasets: Deep learning models perform well in areas where there is a lot of data available.

However, deep learning also has some drawbacks compared to classical machine learning:

- Higher Computational Power: Deep learning requires significant computational resources, often involving GPUs or TPUs.
- Greater Data Requirements: Large amounts of labelled data are typically necessary to train effective deep learning models.
- Longer Training Times: Training deep learning models can be time-consuming due to their complexity and data requirements.
- Lower Transparency: The internal workings of deep learning models can be opaque, making it difficult to understand and interpret the decision-making processes.

In contrast, classical machine learning techniques offer:

- Lower Data Requirements: Can perform well with smaller datasets.
- Less Computational Demand: Typically require less computational power compared to deep learning.
- Faster Training Times: Generally quicker to train, making them suitable for rapid development and iteration.
- Higher Transparency: More transparent, allowing for better understanding and explanation of how the model arrives at its decisions.

(Reference: https://levity.ai/blog/difference-machine-learning-deep-learning, https://aws.amazon.com/what-is/deep-learning/, https://www.ibm.com/topics/deep-learning, https://www.techtarget.com/searchenterpriseai/definition/deep-learning-deep-neural-network)

What is Generative AI?

Definition:

Generative AI refers to a type of Artificial Intelligence that can produce new content, such as text, imagery, audio, and more, based on the data it was trained on. Unlike traditional AI systems, which primarily rely on detecting patterns in pre-existing data to perform tasks, generative AI has the unique capability to create original content that did not exist in the training data.

Context:

It’s important to note that Generative AI as a field of study is separate from Deep Learning and Machine Learning; however, most of the new generative AI applications we see today are built on deep learning techniques.
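To show that generating new content does not have to involve deep learning, here is a toy sketch of a much older and simpler technique: a word-level Markov chain. It records which words follow which in a tiny, made-up training text and then strings words together by sampling from those observations. This is an illustrative assumption on our part, not how modern generative AI products work, but it demonstrates the core idea of producing new sequences based on training data.

```python
import random
from collections import defaultdict


def train(text):
    """Record, for every word, the words that followed it in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word].append(next_word)
    return followers


def generate(followers, start, length, seed=0):
    """Build a sentence by repeatedly picking a word that followed the previous one."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    output = [start]
    for _ in range(length - 1):
        options = followers.get(output[-1])
        if not options:  # the last word never had a follower in the training text
            break
        output.append(rng.choice(options))
    return " ".join(output)


# Tiny made-up training text.
corpus = "the cat sat on the mat and the dog sat on the rug"
model = train(corpus)
print(generate(model, "the", 8))
```

The generated sentence can be a combination of words that never appeared in that exact order in the training text, even though every individual step was learned from it.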

(Reference: https://www.mckinsey.com/featured-insights/mckinsey-explainers/what-is-generative-ai, https://research.ibm.com/blog/what-is-generative-AI)

Large Language Models:

Definition:

A large language model (LLM) is a computer model designed for natural language processing (NLP) tasks. NLP, in this context, is a subset of artificial intelligence (AI) that aims to enable computers to understand, interpret, and generate human language. Today’s LLMs primarily use deep learning techniques, although they may incorporate concepts from classical machine learning as part of their development or in combination with other approaches to enhance their capabilities.

Context:

LLMs are largely the reason for the latest boom in interest in AI, because they give non-technical users an easy-to-use interface that allows them to use and communicate with more complex AI technology.

(Reference: https://www.cloudflare.com/en-gb/learning/ai/what-is-large-language-model/, https://www.ibm.com/topics/large-language-models)

