If you’re looking to set up an Acquired Data connection between SAP Analytics Cloud (SAC) and AWS Redshift, you can create an SQL connection directly from SAC using SAP Cloud Connector and the SAP Analytics Cloud Agent. Check out my previous blog post for a step-by-step guide: How to create an imported connection between SAP Analytics Cloud and AWS Redshift

But if you need a Live Data connection, you will need either third-party software or the SAP Data Provisioning Agent (DP Agent), with the Live Data connection running through an SAP HANA Cloud database instance on the SAP Business Technology Platform (SAP BTP). In this blog post, I’ll walk you through the second option.

With this approach, we add an intermediate layer (SAP HANA Cloud) that not only enables the Live Data connection between SAC and Redshift with good performance at an acceptable cost, but also brings additional benefits to SAC that are not possible with other approaches, including:

- Integrate multiple sources: integrate data in SAC not only from Redshift, but also from other source systems: on-premise, cloud, SAP, non-SAP, via Virtual access or Replication.
- User Authorizations: implement user authorizations in SAC using SAP HANA’s authorization features, such as user groups, roles, and privileges (both static and dynamic).
- Connection Security: implement secure connections using multiple protocols (SAML, JWT, OpenID), data anonymization, encryption, access controls, certifications, and other built-in security features.
- Data Modeling: build data models in SAP HANA, enabling SAC functionalities like parameters, hierarchies, data blending, support for diverse data types, and more, that are not possible with other approaches.

Steps to follow

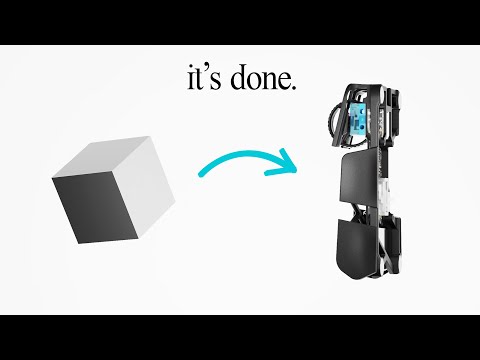

First, we will install and set up the DP Agent on a dedicated server. After that, we will connect HANA Cloud to Redshift. Then, we will connect the Plain Schema with the HDI Container in HANA Cloud. Finally, we will create a Live Data connection from SAC to HANA Cloud.

Integration architecture

Step 1 – Connect Redshift with HANA Cloud

1.1 – DP Agent – Installation and configuration

- Download the DP Agent – follow this link.
- Install the DP Agent on Windows – follow this link.
- Configure the DP Agent – follow this link.
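Before registering the agent, it can help to confirm that the DP Agent server can actually reach both ends of the chain. The following is a minimal Python sketch, assuming Redshift listens on its default port 5439 and the HANA Cloud SQL endpoint on port 443; the helper names are hypothetical and not part of the DP Agent tooling.

```python
import socket

# Assumed ports: Amazon Redshift's default (5439) and HANA Cloud's SQL endpoint (443).
REDSHIFT_DEFAULT_PORT = 5439
HANA_CLOUD_PORT = 443


def can_reach(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port opens within `timeout` seconds."""
    try:
        # create_connection resolves the name and tries each returned address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections and timeouts.
        return False


def check_dp_agent_network(redshift_host: str, hana_host: str,
                           redshift_port: int = REDSHIFT_DEFAULT_PORT) -> dict:
    """Hypothetical helper: test both outbound paths the DP Agent host needs."""
    return {
        "redshift": can_reach(redshift_host, redshift_port),
        "hana_cloud": can_reach(hana_host, HANA_CLOUD_PORT),
    }
```

Run on the DP Agent server, `check_dp_agent_network("<your Redshift endpoint>", "<your HANA Cloud host>")` returns one boolean per target; a `False` is a hint to check firewall, security group, or proxy rules before looking at the agent configuration itself.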

1.2 – HANA DBX – Connect to AWS Redshift

Create a remote source using HANA Database Explorer – follow this link.
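Behind the Database Explorer wizard, a remote source is a `CREATE REMOTE SOURCE ... AT LOCATION AGENT ...` statement. The sketch below only builds that statement as a string so its shape is visible. The adapter name, agent name, and the adapter-specific configuration and credential strings are placeholders you would take from your own DP Agent setup; none of them come from this post.

```python
def quote_ident(name: str) -> str:
    """Quote a SAP HANA identifier, doubling embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def quote_literal(value: str) -> str:
    """Quote a SQL string literal, doubling embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def create_remote_source_sql(source: str, adapter: str, agent: str,
                             configuration: str, credentials: str) -> str:
    """Build a CREATE REMOTE SOURCE statement for an adapter hosted on a DP Agent.

    `configuration` and `credentials` are the adapter-specific strings the
    wizard would produce; this sketch passes them through unchanged.
    """
    return (
        f"CREATE REMOTE SOURCE {quote_ident(source)} "
        f"ADAPTER {quote_ident(adapter)} "
        f"AT LOCATION AGENT {quote_ident(agent)} "
        f"CONFIGURATION {quote_literal(configuration)} "
        f"WITH CREDENTIAL TYPE 'PASSWORD' USING {quote_literal(credentials)}"
    )
```

Quoting every identifier keeps mixed-case names such as an agent called `DpAgent01` intact, since unquoted identifiers are folded to upper case in SAP HANA.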

Step 2 – Connect Plain Schema with HDI Container in HANA Cloud

2.1 – HANA DBX – Create the schema and virtual objects

Create the schema where the virtual objects will be created, create the virtual objects in that schema, create the Application user, create the Access role for that schema, and assign the role to the Application user. This tutorial by Thomas Jung is outstanding.
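As a rough illustration of what this step covers, this sketch assembles the statements for a schema, one virtual table per Redshift table, a technical user, and an access role. All object names, the remote schema, and the `<NULL>` database part of the virtual table path are assumptions, and the exact privileges (for example, whether the role needs grant options for your HDI object owner) should follow the tutorial rather than this sketch.

```python
def quote_ident(name: str) -> str:
    """Quote a SAP HANA identifier, doubling embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def virtual_layer_script(schema: str, remote_source: str, remote_schema: str,
                         tables: list, app_user: str, password: str,
                         remote_db: str = "<NULL>") -> str:
    """Assemble the SQL for step 2.1 (all names are hypothetical)."""
    q = quote_ident
    role = f"{schema}_ACCESS"
    stmts = [f"CREATE SCHEMA {q(schema)}"]
    # One virtual table per remote table: <source>.<database>.<schema>.<table>
    for table in tables:
        stmts.append(
            f"CREATE VIRTUAL TABLE {q(schema)}.{q('VT_' + table.upper())} "
            f"AT {q(remote_source)}.{q(remote_db)}.{q(remote_schema)}.{q(table)}"
        )
    stmts += [
        # Technical user, assumed to be the one the user-provided service logs on with.
        f"CREATE USER {q(app_user)} PASSWORD {q(password)} NO FORCE_FIRST_PASSWORD_CHANGE",
        f"CREATE ROLE {q(role)}",
        f"GRANT SELECT, SELECT METADATA ON SCHEMA {q(schema)} TO {q(role)}",
        # ADMIN OPTION allows this user to grant the role onwards.
        f"GRANT {q(role)} TO {q(app_user)} WITH ADMIN OPTION",
    ]
    return ";\n".join(stmts) + ";"
```

Granting the role `WITH ADMIN OPTION` is what lets the technical user pass it on to the HDI container users, which a role-based `.hdbgrants` file typically relies on.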

2.2 – BTP Cockpit – Activate and configure BAS

Configure the BAS entitlement, subscribe to BAS, and grant user permissions – follow this link.

2.3 – BAS – Create a Dev Space and a HANA database project

Configure BAS following this tutorial by Thomas Jung.

2.4 – BTP Cockpit – Create a user-provided service instance

You can create it from BAS by adding a database connection; the .hdbgrants file is then created automatically – follow steps 1 and 2 of this tutorial.
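The `.hdbgrants` file tells the HDI deployer which privileges the user-provided service should grant to the container's object owner and application user. A minimal sketch of that JSON, assuming the hypothetical service name `REDSHIFT_UPS` and a role named `REDSHIFT_VIRTUAL_ACCESS`; compare it with the file BAS generated for you rather than replacing it.

```python
import json


def hdbgrants(service_name: str, role: str) -> str:
    """Return .hdbgrants JSON granting `role` to the container's owner and runtime user."""
    grants = {
        # Key: the user-provided service the deployer uses as grantor (assumed name).
        service_name: {
            # Object owner: the user that creates container objects (synonyms, views).
            "object_owner": {"roles": [role]},
            # Application user: the runtime user that queries those objects.
            "application_user": {"roles": [role]},
        }
    }
    return json.dumps(grants, indent=2)


print(hdbgrants("REDSHIFT_UPS", "REDSHIFT_VIRTUAL_ACCESS"))
```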

2.5 – BAS – Connect HDI container with schema

Create an .hdbsynonym file and define the synonyms that connect the HDI container with the schema – follow steps 3 and 4 of this tutorial.
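An `.hdbsynonym` file maps each synonym name inside the container to a target object in the plain schema. This sketch generates one synonym per virtual table, reusing the table name as the synonym name; the schema and table names are hypothetical.

```python
import json


def hdbsynonym(target_schema: str, objects: list) -> str:
    """Return .hdbsynonym JSON with one synonym per target object (same name)."""
    synonyms = {
        # Synonym name -> object it points to in the plain schema.
        name: {"target": {"object": name, "schema": target_schema}}
        for name in objects
    }
    return json.dumps(synonyms, indent=2)


print(hdbsynonym("REDSHIFT_VIRTUAL", ["VT_SALES", "VT_CUSTOMERS"]))
```

Hard-coding the schema is the simplest option; an `.hdbsynonymconfig` file can supply it instead so the design-time artifact stays portable. If your project defines a namespace in `.hdinamespace`, the synonym names have to carry that namespace.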

2.6 – BAS – Create a calculation view

Create a calculation view and add the tables to its nodes through the synonyms created in the previous step – follow this tutorial.

Step 3 – Connect HANA Cloud with SAC

3.1 – SAC – Create a live connection to HANA Cloud

Create a live data connection to SAP HANA Cloud using an “SAP HANA Cloud” connection. For single sign-on (SSO), you will need to enable a custom SAML identity provider – follow this link.
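When filling in the connection, the host usually comes from the instance's SQL endpoint, which is displayed together with its port (for example `...hanacloud.ondemand.com:443`). Assuming the SAC dialog expects the bare host name, this small hypothetical helper strips any scheme and port.

```python
from urllib.parse import urlsplit


def sac_host_from_endpoint(endpoint: str) -> str:
    """Return the bare host name from a HANA Cloud SQL endpoint string."""
    # urlsplit only populates netloc when a scheme or a leading '//' is present.
    if "//" not in endpoint:
        endpoint = "//" + endpoint
    host = urlsplit(endpoint).hostname  # drops the port and lower-cases the host
    if not host:
        raise ValueError(f"no host found in {endpoint!r}")
    return host
```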

3.2 – SAC – Create a model

Create a model using the live data connection – follow this link.

3.3 – SAC – Create a story / insight

Create a new story (optimized) using the model – follow this link. You can also create an insight following this link.

Now you are ready to create stories in SAC using a live data connection from Redshift!

If you run into any issues along the way, feel free to leave a comment. I’d be happy to assist.

Kind regards,

Carlos Pinto

Saclab Consulting
