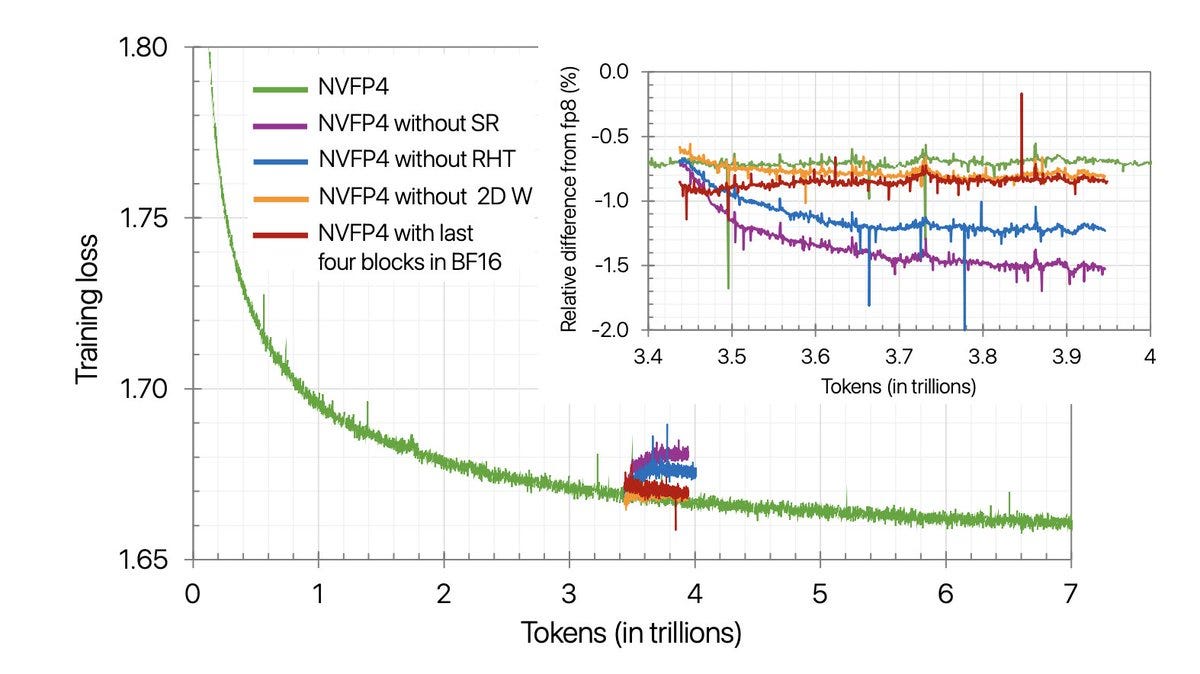

Okay, this one is wild. NVIDIA just trained a 12-billion-parameter language model on 10 trillion tokens — using only 4-bit precision. Yeah…

Continue reading on Coding Nexus (Medium)

#AI
