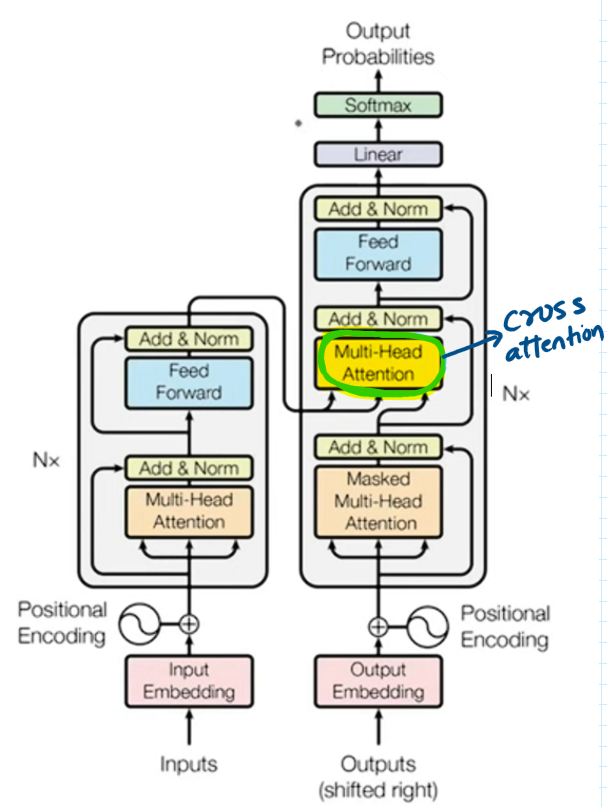

This multi-head attention block differs from the other attention blocks because its input comes from both the encoder and the decoder: the queries come from the decoder, while the keys and values come from the encoder output.
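To make the two input sources concrete, here is a minimal NumPy sketch of such a block. It assumes the standard Transformer arrangement, where queries are projected from the decoder states and keys and values from the encoder output. The function name `cross_attention`, the weight matrices `w_q`, `w_k`, `w_v`, `w_o`, and the toy sizes are hypothetical choices made for illustration, and the weights are random rather than trained.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(decoder_states, encoder_states, w_q, w_k, w_v, w_o, num_heads):
    """Multi-head attention with queries from the decoder and keys/values from the encoder."""
    t_dec, d_model = decoder_states.shape
    t_enc = encoder_states.shape[0]
    d_head = d_model // num_heads

    # Queries come from the decoder; keys and values come from the encoder.
    q = decoder_states @ w_q  # (t_dec, d_model)
    k = encoder_states @ w_k  # (t_enc, d_model)
    v = encoder_states @ w_v  # (t_enc, d_model)

    def split_heads(x, length):
        # (length, d_model) -> (num_heads, length, d_head)
        return x.reshape(length, num_heads, d_head).transpose(1, 0, 2)

    q = split_heads(q, t_dec)
    k = split_heads(k, t_enc)
    v = split_heads(v, t_enc)

    # Scaled dot-product scores: one row per decoder position,
    # one column per encoder position.
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)  # (num_heads, t_dec, t_enc)
    weights = softmax(scores, axis=-1)

    context = weights @ v  # (num_heads, t_dec, d_head)
    # Merge the heads back into a single d_model-wide vector per decoder position.
    merged = context.transpose(1, 0, 2).reshape(t_dec, d_model)
    return merged @ w_o, weights


# Toy example (assumed sizes): 5 encoder positions, 3 decoder positions.
rng = np.random.default_rng(0)
d_model, num_heads = 8, 2
encoder_states = rng.normal(size=(5, d_model))
decoder_states = rng.normal(size=(3, d_model))
w_q, w_k, w_v, w_o = (rng.normal(size=(d_model, d_model)) for _ in range(4))

output, weights = cross_attention(
    decoder_states, encoder_states, w_q, w_k, w_v, w_o, num_heads
)
```

The output has one row per decoder position, while each row of the attention weights spans the encoder positions, which is what lets every decoder step look at the whole encoded input.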
